Why Are My Core Web Vitals Stats Taking So Long to Update?

Every now and then, Google does something that changes the face of SEO forever. Sometimes it's a major algorithm update like Panda, Penguin, or Hummingbird. Sometimes it's less visible, like more or less ignoring meta descriptions, despite recommending that people fill them out. Sometimes it's adding new metrics to the pile of things they monitor, like PageSpeed Insights.

Core Web Vitals falls into that last category. These web vitals are "new" metrics that Google has been developing over the last year or two, and they finally rolled them out as public metrics they monitor and show you in Google Search Console.

So what are the Core Web Vitals, what do they mean, and why do they take so long to update in your Search Console dashboard when things change?

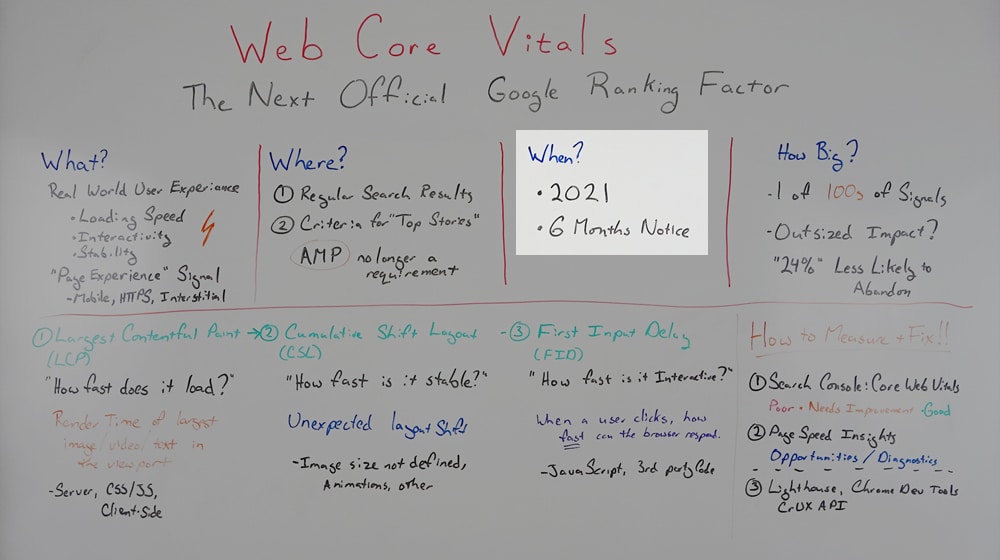

What Are the Core Web Vitals?

To put things in layman's terms, the Core Web Vitals are a series of metrics Google developed that are meant to analyze the experience of using a webpage. It's all about usability, about how it feels to use a page, and about how quickly the page is responsive and stable for use.

Basically, you can divide up Google's ranking factors into two categories: the objective metrics and the experiential metrics.

Objective metrics are more or less "yes/no question" style metrics. Things like "does the page have a version for mobile users" and "does the page spam keywords" and "does the page use HTTPS" are objective metrics. You either meet them or you don't, or you meet certain levels of compliance with them.

Experiential metrics are more subjective and relate to how users actually use a site. PageSpeed is a big one here; the faster a page loads, the more users will use it. The slower a page loads, the more users will bounce to find a better site to give them what they're looking for.

Core Web Vitals basically took a deep look at those initial moments of a page loading and identified three specific key moments that define how users feel about using a site. It's actually very clever, and they aren't metrics I would have intuitively thought about, but they're very important when you know what they are.

So what actually are the Core Web Vitals? There are three specific signals. All three of them have to do with the initial load order and process of your website when a user visits it from a search result. They're essentially subsets of page speed.

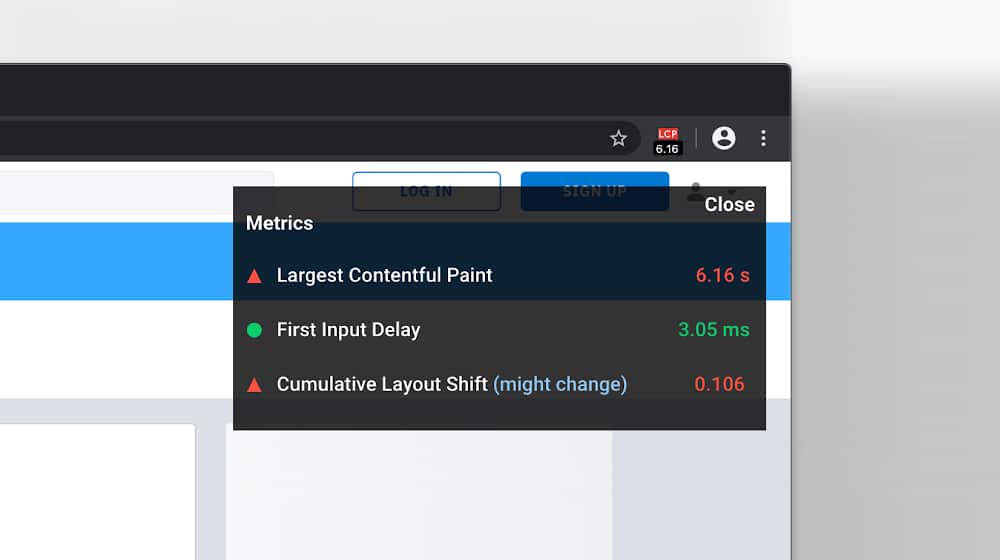

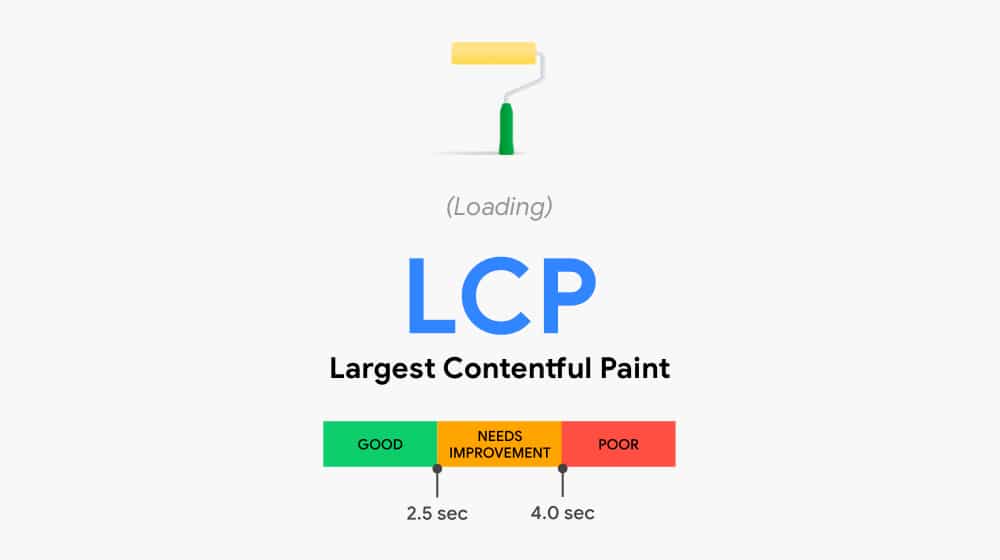

LCP: Largest Contentful Paint. This is basically "how fast does the page load?" Google looks for the largest thing in the viewport of your site when it loads, whether that's a huge image across the top, the primary content of the page, a heavily featured video, or whatever else. The "largest" piece of content. How long, from the first click, does it take for that largest element to be painted on the viewport and visible to users?

All three of these metrics are measured in one of three categories. They're either Good, Needs Improvement, or Poor. Obviously, you want to reach Good for all of them.

For LCP, "Good" is under 2.5 seconds, "Needs Improvement" is between 2.5 and 4 seconds, and "Poor" is over 4 seconds. Now, a load time under 2.5 seconds is already emphasized by PageSpeed Insights, which measures in milliseconds, so this should be relatively easy to meet.

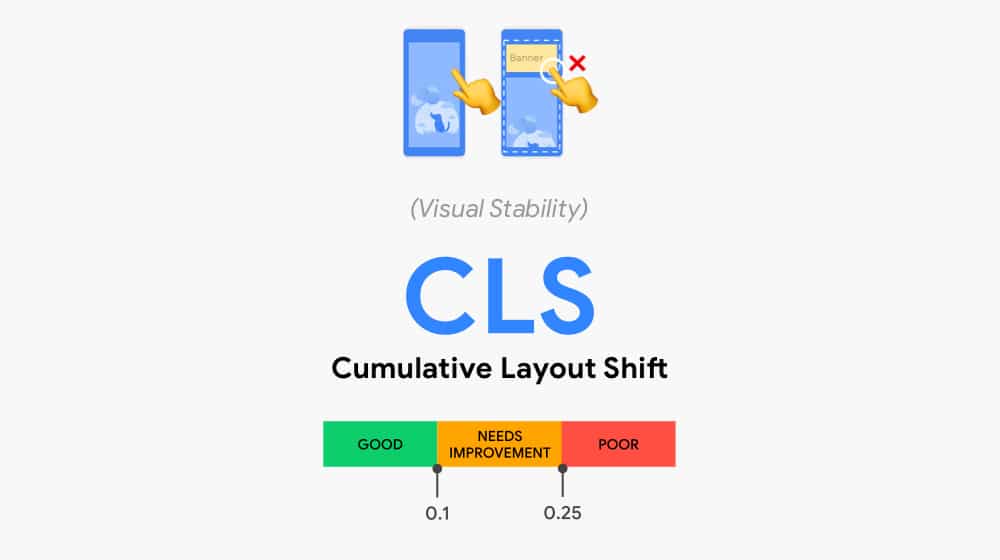

CLS: Cumulative Layout Shift. Have you ever loaded a page that looked ready, gone to tap something, and had the page or the button suddenly shift? Some image or element was lazy loaded at the top of the page and pushed everything down, or a new bar showed up, or a shutter scrolled down, or your CSS loaded out of order. The point is, you went to interact with the page and had that interaction disrupted because some element caused the page to shift.

CLS measures how much the visible content of the page moves around unexpectedly, rather than how long it takes to settle down. The calculation is somewhat complicated and technical, so telling you the specific numbers you need to meet is meaningless without context. Here they are anyway: "Good" is under 0.1, "Needs Improvement" is between 0.1 and 0.25, and "Poor" is greater than 0.25. What precisely that means, well, you'll need to read Google's technical documentation on CLS to really understand it.
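For the curious, here is a rough Python sketch of how a CLS number is put together. It assumes the session-window definition Google adopted in 2021, after this post was first written. Each individual layout shift scores its impact fraction times its distance fraction. Shifts that happen right after the user's own input are ignored. Shifts less than a second apart are grouped into windows of up to five seconds, and CLS is the largest window's total. The `LayoutShift` class and the function names are hypothetical. In a real browser, the per-shift values come from the Layout Instability API rather than being typed in by hand.

```python
from dataclasses import dataclass


@dataclass
class LayoutShift:
    time_ms: float            # when the shift happened, relative to page start
    impact_fraction: float    # share of the viewport touched by the moving elements (0..1)
    distance_fraction: float  # how far they moved, relative to the viewport's largest side (0..1)
    had_recent_input: bool = False  # shifts right after user input are expected, so not counted


def shift_score(shift: LayoutShift) -> float:
    """Score for one layout shift: impact fraction x distance fraction."""
    return shift.impact_fraction * shift.distance_fraction


def cumulative_layout_shift(shifts, gap_ms=1000.0, max_window_ms=5000.0):
    """Largest session-window total of unexpected layout-shift scores (sketch)."""
    best = 0.0
    window_total = 0.0
    window_start = None
    last_time = None
    for s in sorted(shifts, key=lambda s: s.time_ms):
        if s.had_recent_input:
            continue  # user-triggered shifts don't count against the page
        starts_new_window = (
            window_start is None
            or s.time_ms - last_time >= gap_ms            # a quiet gap of 1 s or more
            or s.time_ms - window_start >= max_window_ms  # window already 5 s long
        )
        if starts_new_window:
            window_start = s.time_ms
            window_total = 0.0
        window_total += shift_score(s)
        last_time = s.time_ms
        best = max(best, window_total)
    return best


if __name__ == "__main__":
    trace = [
        LayoutShift(100, 0.5, 0.1),   # small shift early in the load
        LayoutShift(600, 0.4, 0.1),   # close behind it, so same window
        LayoutShift(3000, 0.5, 0.2),  # much later, so it starts a new window
        LayoutShift(3200, 0.9, 0.9, had_recent_input=True),  # caused by a tap, ignored
    ]
    print(round(cumulative_layout_shift(trace), 3))  # 0.1
```

In this made-up trace, the two early shifts form one window worth about 0.09. The later shift forms its own window worth 0.1, so the page's CLS is 0.1, right on the edge of Good.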

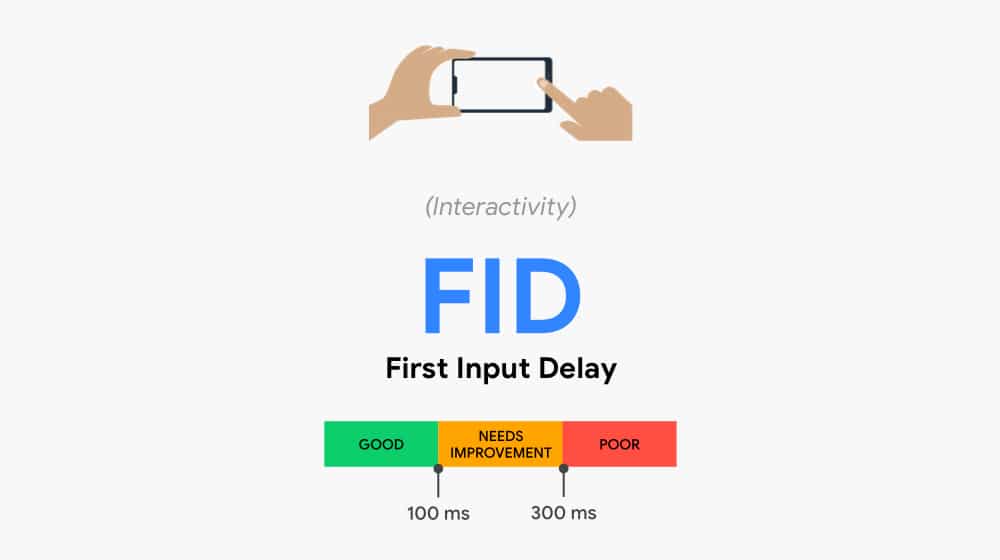

FID: First Input Delay. To put this one simply, "how long before the page actually responds when you try to interact with it?" FID measures the time from a user's first interaction with your page (a click, a tap, a key press) to the moment the browser is able to start handling that interaction. We've all ended up on pages where we know exactly what we want to do, but we have to wait before a button will respond because the rest of the page is still loading or processing scripts or rendering content. Google wants your page to become interactive faster, so they measure the delay before that first input is handled.

In this case, "Good" is under 100 milliseconds, "Needs Improvement" is between 100 and 300 milliseconds, and "Poor" is over 300 milliseconds. You can read more about this one on a technical level in Google's documentation.
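The three sets of thresholds boil down to a simple lookup. This Python sketch assumes that a value sitting exactly on a boundary falls into the better bucket. That is how Google's own documentation words it: for example, an LCP of 2.5 seconds or less is Good. The `THRESHOLDS` table and the `rate` function are illustrative names, not part of any Google tool.

```python
# (upper bound for "Good", upper bound for "Needs Improvement") per metric,
# using the numbers quoted in this article.
THRESHOLDS = {
    "LCP": (2.5, 4.0),   # seconds
    "FID": (100, 300),   # milliseconds
    "CLS": (0.1, 0.25),  # unitless layout-shift score
}


def rate(metric: str, value: float) -> str:
    """Map a Core Web Vitals measurement to Good / Needs Improvement / Poor."""
    good_max, needs_improvement_max = THRESHOLDS[metric]
    if value <= good_max:
        return "Good"
    if value <= needs_improvement_max:
        return "Needs Improvement"
    return "Poor"


if __name__ == "__main__":
    print(rate("LCP", 3.2))   # Needs Improvement
    print(rate("FID", 80))    # Good
    print(rate("CLS", 0.3))   # Poor
```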

Why Are The Core Web Vitals Important?

The Core Web Vitals are important for two reasons.

First of all, they're a usability factor. Having better metrics in the Core Web Vitals report means that your site is more usable faster, and that means it's more accessible for users. A better overall user experience is what Google has been pushing on webmasters for a decade now, so it's no surprise they want to promote this.

In fact, Google has run their own studies about these metrics, and they found that when a site meets the Core Web Vitals thresholds, users are 24% less likely to abandon the page load. Who doesn't want a nice, easy way to tell what might be driving users away and fix it?

The second reason is that, in the future, Core Web Vitals are going to be the primary measurement you need to meet to be featured in Google's Top Stories carousel. Right now, in order to be a Top Story, you need to use the AMP (Accelerated Mobile Pages) system.

Google has announced that, in the future, you will not need to be an AMP page to be featured in Top Stories. Instead, you will simply need to meet certain minimum standards in the Core Web Vitals.

It's a positive update overall, albeit one that encourages a lot of cynicism from SEOs and webmasters. AMP is a fixed system; you meet it or you don't, you have to do specific things for compatibility, and it puts you in a special subset of websites capable of reaching specialized feeds like Top Stories.

Right now, there are plenty of good sites out there that just don't want to do the buy-in for a Google system like AMP, so they haven't. Those sites would be great for Top Stories, but they're locked out. With this change, more sites will be able to reach Top Stories, sites won't need to contort themselves to meet AMP requirements, and (most importantly for Google) it's one less system Google has to maintain.

Now, at the time of writing, this change hasn't happened yet. Google has announced that it's going to happen eventually (most likely early 2021), and they plan to give webmasters a six-month notice before the change goes into effect. They've effectively announced an announcement.

That timeline has already been pushed back due to COVID-19, so you probably have more time to adapt. For now, the Core Web Vitals report in the Search Console exists, and you can tweak your site to boost your compliance with those metrics.

This will enhance your user experience, and possibly have a beneficial effect on your overall SEO, but it won't dramatically open doors to you just yet. Later, though, more and more things will benefit from high Core Web Vitals, and by adjusting now, you'll be in a great position to take advantage of those benefits when they arrive.

And, let's be honest here, Google has no qualms about killing off services they formerly planned to make integral to SEO. Remember Authorship? AMP just becomes one more item on the list.

Besides all of that, it's not like you have much choice in the matter. Core Web Vitals are here now, and once they get more and more emphasis in the algorithm, you either adapt or you fall by the wayside. It's the same pressure as the push to have mobile/responsive design, the push to have thick, robust, unique content, and the push to minimize excessive ads. It's the New Normal.

Besides, it's about usability. Poor Core Web Vitals just means your site has usability concerns. Improving them means improving your user experience, and that's just plain good for everyone.

Monitoring Changes to Core Web Vitals

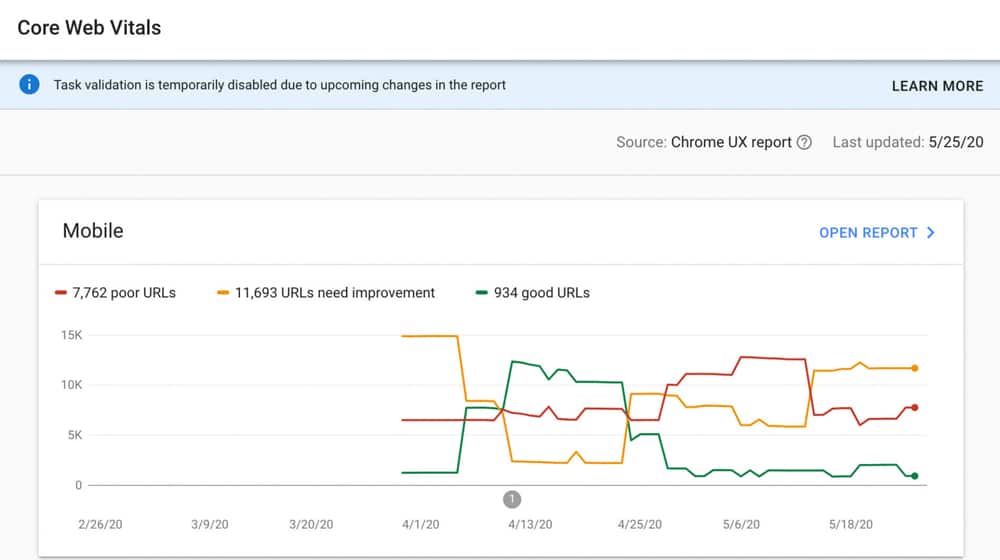

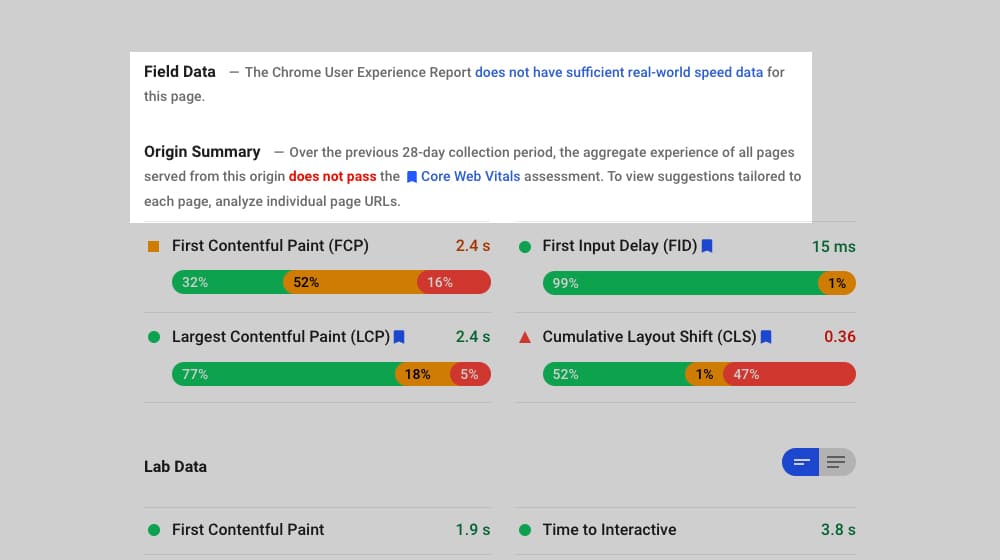

In Google Search Console, you'll find the Core Web Vitals report in the left column, under the Enhancements section. The data behind it comes from the CrUX Report, or Chrome User Experience Report.

See, here's something a lot of people might not know about modern SEO. Years ago, SEO was measured by Google Search Crawlers, or spiders, navigating the web. Thousands of servers sent out hundreds of thousands if not millions of these little robots, crawling and indexing and processing the web.

There were a few problems with this. First and foremost, when you know the user agent of an entity crawling your site, you can adjust your site's design and appearance for that agent. You could show Google one thing and users another thing.

Secondly, Google's bots were not very robust compared to a full browser. They would, for example, render sites without JavaScript, in part to get around the previous issue and in part just to minimize the processing power necessary to run them.

This is fine, but it's only good for certain elements of a site. Page load speeds will be a lot faster if scripts don't exist, after all, so Google's bots wouldn't necessarily get an accurate picture of how a site worked.

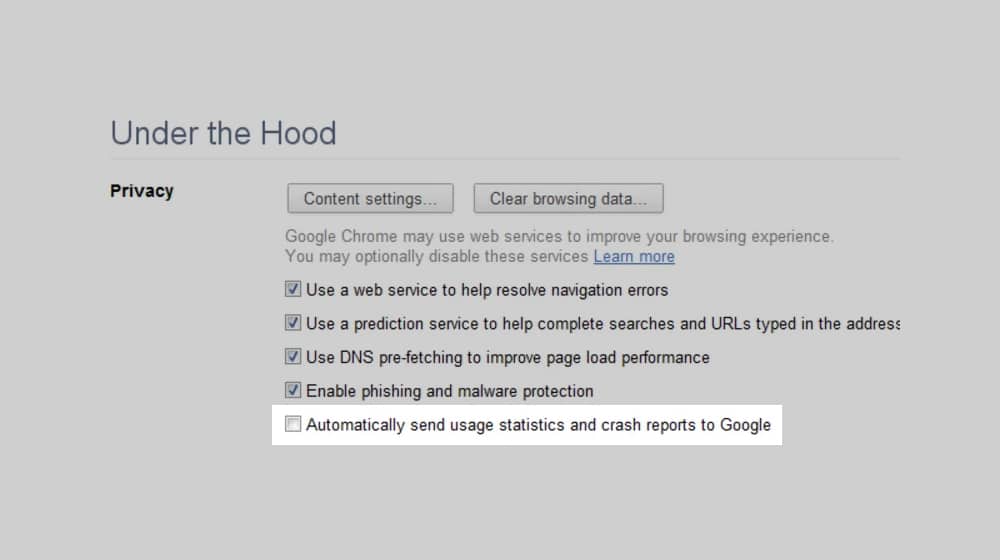

All that changed when Google released Chrome, and Chrome caught on enough to become one of the biggest web browsers in the world. These days, Chrome is the main way Google gathers real-world data about how websites perform: Chrome users who have opted in report data about the sites they visit back to Google.

This gives Google an absolutely astonishing wealth of data about a website, but it does lead to a few drawbacks.

First and foremost amongst those drawbacks is that smaller sites don't get very much data reported about them. If you only have a few hundred visitors a month, and only a portion of them are using Chrome, that's a very small sample size of data from which Google can draw conclusions.

That's why owners of small sites who check the Core Web Vitals report will often see "Not enough data for this device type" on both the mobile and desktop reports.

This is also the answer to the main question in the title of this post. Why does it take so long for my Core Web Vitals to update? Google has to harvest enough data to adjust the metrics. The lower your traffic numbers, the less data they get, so the longer it takes to build a statistically reliable picture.
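To see why sample size matters, consider how Google summarizes field data. Core Web Vitals are assessed at the 75th percentile of page loads, so a page passes only if at least three quarters of real visits hit the Good threshold. This Python sketch uses the nearest-rank percentile and a hypothetical `MIN_SAMPLES` cutoff. Google doesn't publish the exact minimum it requires, so that number is a placeholder, and the function names are made up for illustration.

```python
import math
from typing import List, Optional

# Hypothetical cutoff for illustration only; Google does not publish its real minimum.
MIN_SAMPLES = 200


def p75(samples: List[float]) -> float:
    """75th percentile of the samples, using the nearest-rank method."""
    ordered = sorted(samples)
    rank = math.ceil(0.75 * len(ordered))  # 1-based rank into the sorted list
    return ordered[rank - 1]


def field_lcp(samples: List[float]) -> Optional[float]:
    """Return the p75 LCP in seconds, or None when there are too few visits to report."""
    if len(samples) < MIN_SAMPLES:
        return None  # roughly what "Not enough data for this device type" means
    return p75(samples)


if __name__ == "__main__":
    print(p75([1.2, 1.4, 1.9, 6.0]))        # 1.9
    print(p75([1.2, 1.4, 1.9, 6.0, 5.5]))   # 5.5
    print(field_lcp([1.2, 1.4, 1.9]))       # None
```

With only a handful of visits, a single extra slow load moves the 75th percentile from 1.9 seconds (Good) to 5.5 seconds (Poor). That volatility is why it makes sense to wait for more data before reporting anything.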

Remember that Google is using real-world data for these metrics, not just data they harvest via a data center robot using the world's largest and fastest internet connection. Your data is coming from people living in Silicon Valley, people living in rural Idaho, and people living in the middle of Pakistan, all visiting your site while using Chrome.

Google is also only using a sample; not everyone using Chrome who visits your site is reporting data. Can you imagine if 100% of the web browsing of 60% of the people in the world was reported back to Google's servers? They're powerful, but even they don't have the bandwidth or the processing power to handle all of that.

This does have a few drawbacks. For one thing, if Chrome itself updates, that can change how it renders sites, and that changes the metrics. Your Core Web Vitals can change without you changing anything at all. All you can do is strive to meet the minimums for Good on a technical level, and hope any variations are still minimal enough to keep you in Good.

The Fix Validation Process

When you're looking at your Core Web Vitals, you might examine your site and identify specific problems. You implement a fix, but when you check your Core Web Vitals report the next day or the next week, it hasn't updated. Did you do something wrong?

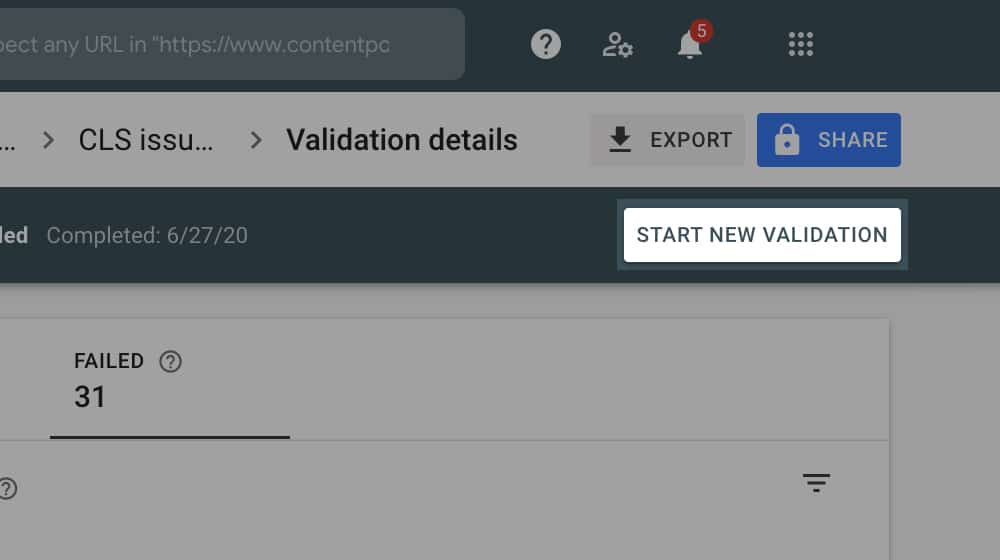

Google offers a validation process for exactly this purpose. When they identify a specific issue with one of the Core Web Vitals, the CrUX report will tell you what it is and give you links to ways to fix it. Once you fix it, you can validate that fix.

A "validation" is basically Google keeping an eye on the affected URLs, watching the real-user data that keeps arriving from Chrome to see whether the issue still shows up. This validation process is a 28-day monitoring session. Only once the monitoring period is up will your metrics update to tell you whether or not the issue is fixed.

You can initiate validation on a site-wide or URL-specific basis, but they both take nearly a full month to harvest enough data from enough sources to validate the issue. That's why it can take so long.
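A rolling window also explains why a fix doesn't show up overnight. This Python sketch makes several assumptions: steady traffic, a page that loaded in 3.5 seconds before the fix and 1.8 seconds after it, and a report that takes the 75th percentile over the most recent 28 days of visits. Those numbers and the `days_until_good` helper are hypothetical. Real data is much noisier, but the sketch shows the basic reason for the delay.

```python
import math

WINDOW_DAYS = 28  # length of the rolling window being summarized


def p75(samples):
    """75th percentile using the nearest-rank method."""
    ordered = sorted(samples)
    return ordered[math.ceil(0.75 * len(ordered)) - 1]


def days_until_good(before, after, threshold=2.5, loads_per_day=10):
    """Return the first day after a fix on which the rolling p75 reaches the
    Good threshold, or None if it never does within one full window."""
    window = [before] * (WINDOW_DAYS * loads_per_day)  # window is entirely pre-fix
    for day in range(1, WINDOW_DAYS + 1):
        # The oldest day's visits age out and a day of post-fix visits comes in.
        window = window[loads_per_day:] + [after] * loads_per_day
        if p75(window) <= threshold:
            return day
    return None


if __name__ == "__main__":
    print(days_until_good(3.5, 1.8))  # 21
```

Even though every visit after the fix is fast, the old slow visits keep the 75th percentile above 2.5 seconds until fast visits make up at least three quarters of the window. In this sketch that takes 21 days.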

November 16, 2020

In case you get a "Poor" in any of these areas, will this greatly affect SEO?

November 16, 2020

Hey Bob!

Google said they are postponing this algorithm update until some time in 2021.

If you have a slow-loading site, it could still affect your SEO since Google has been taking site speed seriously for a while now.

Google will also be judging your site based on how it loads and the user's experience starting in 2021, which is tied in pretty closely to your page speed.

You should certainly aim for a high score for SEO purposes. A fast-loading site is good user experience, and good user experience will result in higher rankings.

November 26, 2020

I tried this and got a Good rating for everything except FID, which has a Needs Improvement mark. Do you have any suggestions on how I can make it better?

November 30, 2020

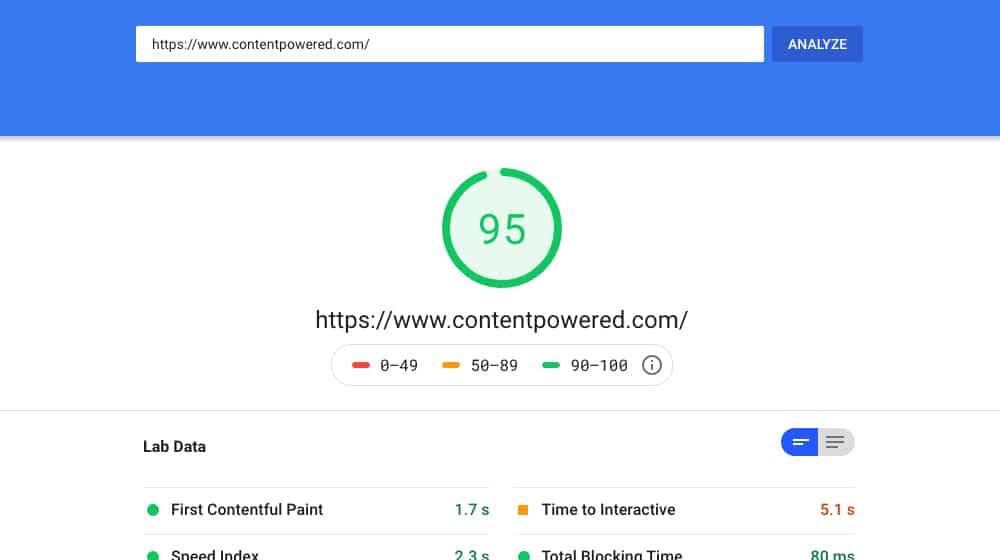

Hey Donna! Running the affected web pages through Google PageSpeed Insights will give you a breakdown of what is hurting your score.

If you're using WordPress, we really like WP Rocket for improving your score, combined with Imagify for optimizing your images.

Both are paid plugins and are very much worth it.

We use both on our site - you can run our site through PageSpeed Insights to see the score of our site.

December 17, 2020

Nice article. Do you have suggestions on what to do in case you have a poor rating on any of the items above?

December 18, 2020

Hi Michael!

I'm actually writing an article for SearchEngineWatch on this very subject, a large guide on how to improve your score.

The best way to start is to run your site through PageSpeed Insights to see what your site is having issues with specifically.

Make sure you're testing multiple pages and all pages reported as slow in Search Console.

It depends on your CMS too - optimizing a WordPress site is going to be much easier than Shopify, for example.

Run a check on your site and let me know what it tells you.

Feel free to drop me an email and I can point you in the right direction.

February 08, 2021

I tried this and I'm wondering why my CLS is 0 in the lab data. I don't know what to do. Could you please help me figure it out?

February 11, 2021

Hi Stephanie!

CLS stands for Cumulative Layout Shift. Layout shift is bad for user experience, since you don't want your web page shifting links and elements around as it loads.

It could result in people accidentally clicking the wrong thing.

A CLS of 0 is considered a perfect score.

In this case, I would do nothing! Everyone should strive for 0 CLS.

March 01, 2021

Hi James! Has it taken effect yet?

March 04, 2021

Hi Martina! I believe it was delayed until May 2021, though this could change.

May 31, 2021

Interesting. Some of my pages are rated high but most are rated as Needs Improvement. It's bouncing around like crazy.

June 04, 2021

Hey Patrick!

The smaller the website is, the longer it takes for Google to retrieve accurate data.

If your site scores high on Google PageSpeed Insights, I wouldn't pay much mind in the short term to the data in Google Search Console.

Once they've collected enough data over the next year or so, it should start to look closer to your actual page experience.

June 23, 2021

The coverage report shows that my site has 14.5K valid pages, but Search Console only shows 250 pages in the Core Web Vitals report. I'm not sure why the report shows so few pages as good when I have thousands of pages. I have 0 poor, and all my pages pass the Core Web Vitals test in PageSpeed Insights.

June 23, 2021

Hey Chris!

This is totally normal. It takes a while for Google to collect enough real-user data from Chrome for each of your pages.

They'll most likely update your popular pages first since those have more traffic (and, therefore, more data that they can collect).

It's best to focus on those 14.5k pages on your end and ensure that they score high on both desktop and mobile.

If they do, all you can do is wait for Google to catch up and collect enough data. This could take months or even years.

February 01, 2022

Nice and informative. Thanks, James.

February 04, 2022

Thanks, Luisa!

November 18, 2023

You were the 2nd search result. Nice job! Very informative article.

When I test my website, it scores 100 for performance and every other category. I made a fix about 6 weeks ago because it was ~95 previously due to CLS, which caused the Core Web Vitals Assessment to be marked as "failed" in red.

My question: can I still get a "failed" mark for the Core Web Vitals Assessment even if every test now says 100 on mobile and desktop? Is there sometimes a delayed update?

thanks!!

November 18, 2023

Thanks, Hydn!

What you're seeing is the discrepancy between the real-world data Google collects and the instant test that you ran.

The instant tests using their tool are helpful to tune your site, as you already have.

The real-world tests are data that is collected from Google's Chrome Browser. They wait until they have enough human visitors landing on your site, and they use that metric as their real-world test. I suspect that the reason they do this is because it's relatively easy to fool the PageSpeed Insights tool using browser fingerprinting, inspecting request headers, etc. But, it's very tough to fake thousands of real people, all logged into their Google account and using the Chrome browser, over a period of months. This is the data Google relies on.

It can take months for the real-world tests to update. Now, all you have to do is wait!

High-traffic sites update a lot quicker, and lower-traffic sites take longer to update - at least, in my experience.

I hope this helps - you should see your score update soon!