12 Reasons Why ChatGPT Won't Replace Your Content Writers

AI is sweeping the internet in art and writing, and the more creative content producers out there are starting to get worried. A little while ago, I did a deep dive review into Jasper, the business content AI promising to revolutionize blogging, which I found to be… decidedly unthreatening.

Jasper isn't the only AI on the block, though. Microsoft has been working on an AI-powered Bing service, Google just released their Bard AI (to disastrous effect), and ChatGPT is the subject of a million threads on Reddit, Twitter, and elsewhere.

ChatGPT is the big one I'm looking into today, but I can already tell you one thing. It's excellent at some things, mediocre or terrible at others, and blocked from touching some of the more challenging subjects at all. If you're worried about it taking over the world of web writing, you can set your fears aside.

It's also being exploited in negative ways already, which doesn't bode well, but that's a story for another time.

A Closer Look at ChatGPT

Before getting into the specific reasons why ChatGPT will not replace your content writers, let's first take a deeper look at what it is, what it's designed to do, and what it isn't.

On the surface, it's all very impressive. It's also not limited to pure AI writing and dialogue; it can produce, review, and debug code, and more.

ChatGPT is initially free as a "research preview," which OpenAI is using to gather feedback, further refine the training set, and watch for exploits they can block. A big one: there seems to be a legion of people on Reddit and 4chan trying desperately to get the AI to use racial slurs, including creating insanely convoluted scenarios to trick it into moral conundrums, as if it's not just a mathematical structure analyzing language with human touches tacked on.

They've also introduced ChatGPT Plus, which costs $20 per month and gives you access even when the ChatGPT servers are under high load. I'm using the free version for now. I'm on the waitlist for the paid version so I can review that next, but I likely won't be getting access anytime soon.

I encourage you to sign up and play around with it. Even if I'm cynical and biased against AI, it's still a fun novelty toy that can generate a lot of interesting content, even if it's inappropriate for content marketing or blogging on a business website.

A Dozen Reasons ChatGPT Won't Replace Your Content Writers

ChatGPT has its challenges. One of them is front and center on their blog, and it shows up as a warning when you sign up and throughout your usage. So, let's start there.

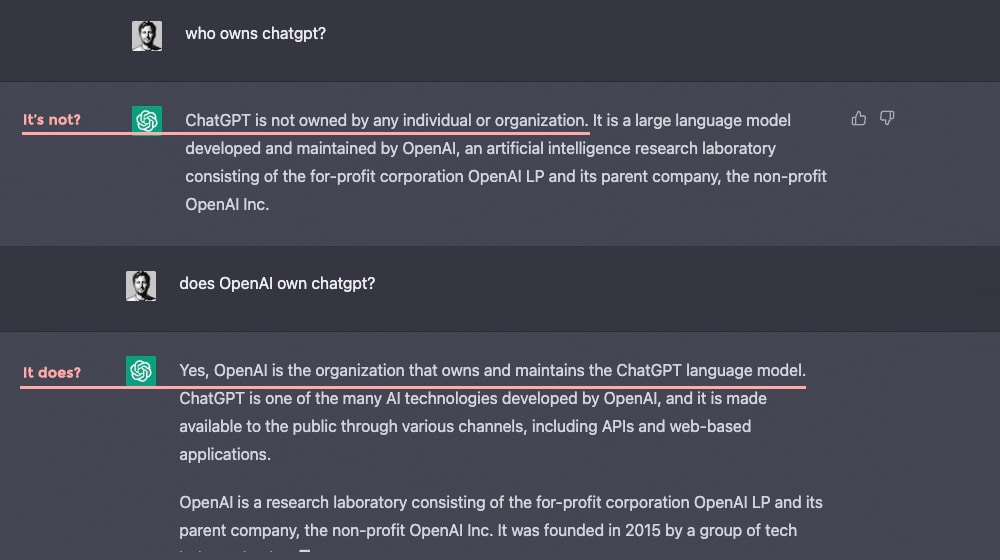

1. It Has No Factual Accuracy

Read the link above about Google's Bard AI. You can see how they debuted it with a bold public demonstration, where it immediately and confidently stated a "fact" that wasn't true.

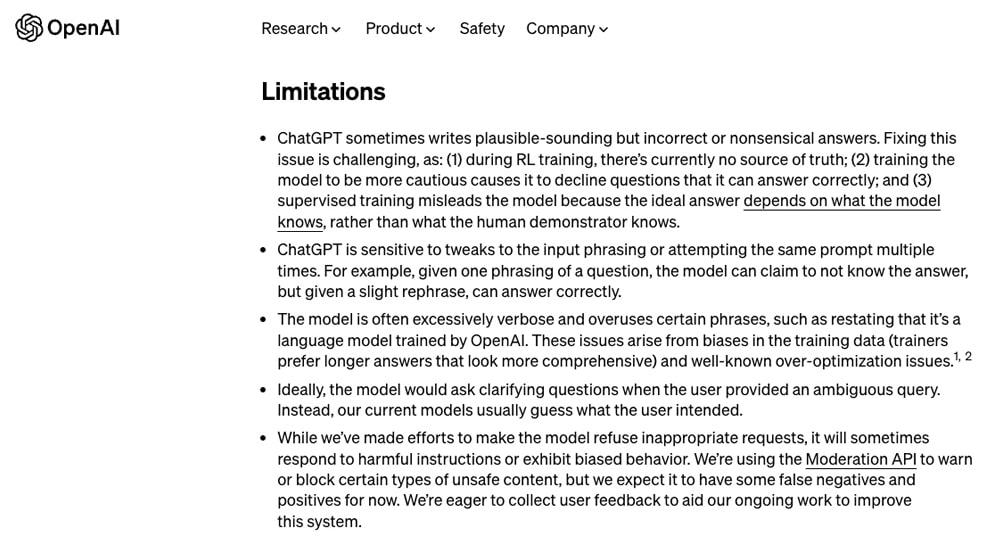

ChatGPT, Jasper, and every other language AI have the same problem. In fact, ChatGPT has a warning about it on its blog homepage.

"ChatGPT sometimes writes plausible-sounding but incorrect or nonsensical answers. Fixing this issue is challenging, as: (1) during RL training, there's currently no source of truth; (2) training the model to be more cautious causes it to decline questions that it can answer correctly; and (3) supervised training misleads the model because the ideal answer depends on what the model knows, rather than what the human demonstrator knows."

In other words, it has no way to verify a fact; this is an inherent flaw in how AI is created. It is a very complex mathematical relationship between words and phrases. It has no outside frame of reference, so it can say things that are incorrect with absolute confidence because to it, saying the moon is made of paper and the sky is blue are the same kind of statement.

Do you want to take that risk when your brand is on the line? Google did, and they lost an immense amount of money and were embarrassed because of it.

2. It Can Be Offensive

Another warning that ChatGPT gives you off the bat relates to those people who are trying very hard to get the AI to say racial slurs.

They say:

"While we have safeguards in place, the system may occasionally generate incorrect or misleading information and produce offensive or biased content. It is not intended to give advice."

They have a similar warning on their homepage.

"While we've made efforts to make the model refuse inappropriate requests, it will sometimes respond to harmful instructions or exhibit biased behavior. We're using the Moderation API to warn or block certain types of unsafe content, but we expect it to have some false negatives and positives for now. We're eager to collect user feedback to aid our ongoing work to improve this system."

Now, obviously you aren't going to try and get it to do something like that for your content marketing. But there's always the chance that something slips through, so you would, at the bare minimum, need a sensitivity editor to read through anything the AI produces to make sure it's not offensive in some way.

3. It Falters in Long-Form Content

Another problem generative AI has for content is that the baseline for good online content is still much larger and longer than what these AI systems can produce. They can't produce content at any sufficient length. I mentioned this in my Jasper review, and it's also here with ChatGPT.

In a research study I put together a few years ago, I analyzed nearly 30,000 blog posts ranking highly in Google search, looking specifically at how long they were. It showed two primary takeaways. First, the average word count for high-ranking blog posts in 2019 was around 1,200 words. Second, that average had been rising over the previous handful of years.

I always aim for at least 2,000 words and often go over it.

Jasper produced a similar report just a few weeks ago, and their conclusion is an extension of that increasing trend: the best blog posts are between 1,500 and 2,500 words long.

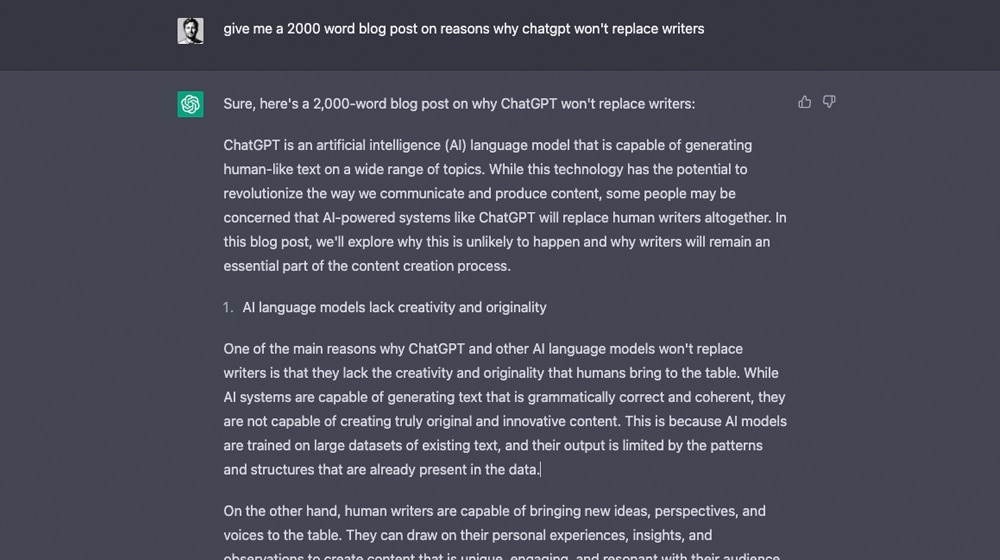

These AI systems seem to cap out at around 600 words at a time. I asked ChatGPT to produce a 2,000-word blog post, and it gave me only 640 words. Not nearly sufficient for my purposes! It could serve as a basic outline, but that's a lot of fluffing up to do to double or triple the length of the post.

With competing AI systems, you can technically write a 2,000-word post, but that involves creating an article with many sub-headings and 200-300 word chunks. The more AI writes, the more you have to carefully read it to make sure it's staying on topic, not contradicting or repeating itself, and working toward a logical conclusion.
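To put rough numbers on that chunking, here is a minimal Python sketch. The function name `plan_sections` is hypothetical and only for illustration; the 2,000-word target and the 200-300 word chunk sizes come from the figures above.

```python
import math


def plan_sections(target_words: int, words_per_chunk: int) -> int:
    """Return how many chunks of about words_per_chunk words it takes
    to reach target_words (hypothetical helper, for illustration only)."""
    if target_words <= 0 or words_per_chunk <= 0:
        raise ValueError("both arguments must be positive")
    return math.ceil(target_words / words_per_chunk)


# A 2,000-word post at both ends of the 200-300 word range.
sections_at_300 = plan_sections(2000, 300)
sections_at_200 = plan_sections(2000, 200)
print(f"Sub-headings needed: {sections_at_300} to {sections_at_200}")
```

That works out to somewhere between seven and ten separately generated sections, and each one has to be read and checked against all the others.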

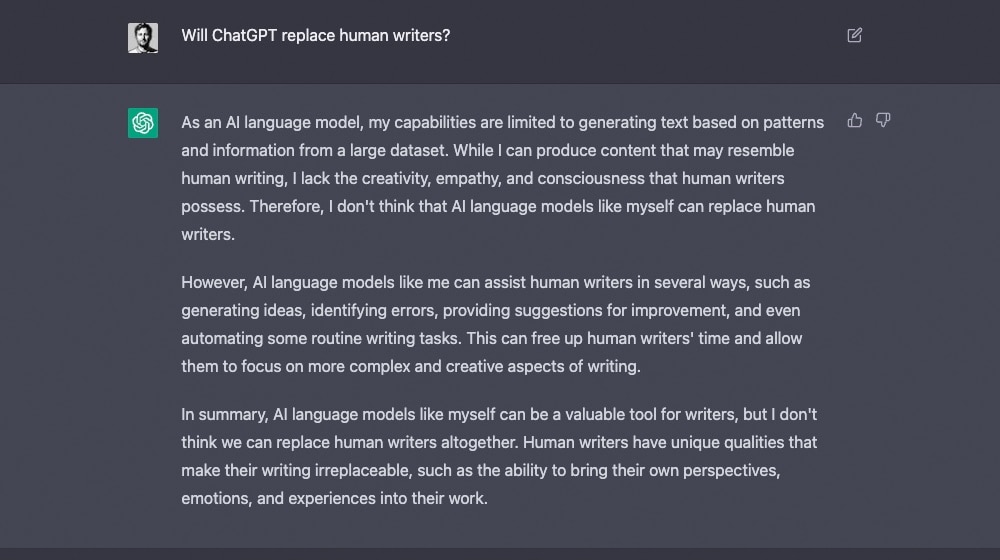

4. AI Systems Lack True Storytelling

Here's a fun one. I asked ChatGPT why AI won't take over content marketing. In its list, it gave me this.

"AI is not capable of creating compelling and engaging content that resonates with consumers. Content marketing is more than just delivering information to consumers; it's about telling a story that resonates with them emotionally and connects with them on a deeper level.

This is where human creativity and emotional intelligence come into play. Content marketing is a human-centered discipline that requires a deep understanding of consumer psychology and behavior. Only a human can understand and empathize with the emotions and desires of their target audience. This emotional connection is what sets content marketing apart from other forms of marketing and is what makes it so effective. AI algorithms, no matter how advanced they are, simply cannot replicate the emotional intelligence and empathy that humans possess."

One of the critical elements of modern content marketing is engaging narratives. You're not just stating facts, giving surface-level reports, or providing basic tutorials. You're bringing a narrative to the table. You're building emotional resonance with your audience. Even this blog post you're reading right now is about me easing your fears, not just a list of reasons.

5. Training an AI is Time-Consuming

There's a reason there are only a few big AI systems, and they're all produced by significant groups like OpenAI, Microsoft, and Google. OpenAI uses Microsoft's Azure supercomputer clusters to do its work.

Training an AI is a long-term process involving deep, iterative analysis and repeated rounds of reward systems. Even with massive datacenters full of supercomputers, it takes years to "complete" and is never truly done.

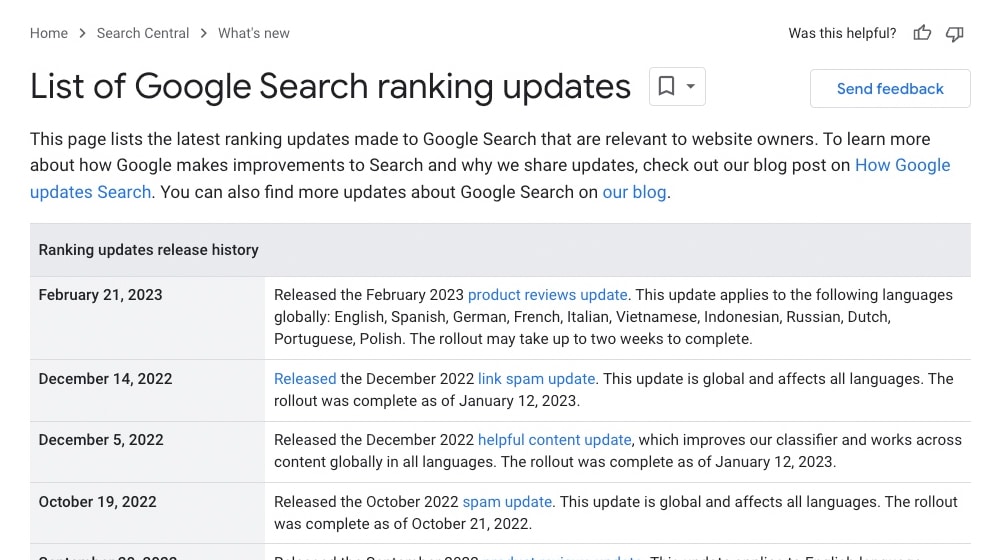

Why is this important? Let me ask you a related question: how often does SEO change?

Answer: every few weeks, at least. Minor changes are happening all the time, whether it's to the algorithm directly, the trends in content consumption, or flash-in-the-pan viral hits. To properly capitalize on anything, you need to be agile and adaptable. The AI? It's not.

6. Training Needs a Data Set

Speaking of data sets, you need a data set for an AI to produce content. AI can generate content, but all of the content it generates is essentially a remix of what it has in its data set. It can't synthesize new facts using logic and the information it knows because that's not how it thinks. There's no critical thinking or original thought in an AI system.

That means, sooner or later, you still need humans to produce content that the AI could adapt. You can think of it as a very advanced form of article spinning.

However, when you put it like that, the internet is already like that, isn't it?

We didn't need AI for the bulk of our content to be remixed and rehashed garbage.

7. AI Only Replaces the Bottom Tier of Content

Will AI steal your job? Well, maybe, but not in the way you might think.

AI can produce basic content and content that isn't truly unique, even if it passes Copyscape and other checks. For blogs where 100% of their content is just a rewrite of what the big names in the industry are doing, that's probably sufficient! But those businesses aren't going to take up top spots in their industries on the backs of nothing original or new.

If I were to start using ChatGPT to handle my writing process for me, I would lose all my clients when they saw the immediate, stark drop in quality.

There would be no more unique perspectives.

Notice how many times I say "I" in this article. These are my thoughts, not generalized information from a database.

8. Generated Content Will Start to Look the Same

Another one of the warnings on the front page of ChatGPT is this:

"The model is often excessively verbose and overuses certain phrases, such as restating that it's a language model trained by OpenAI. These issues arise from biases in the training data (trainers prefer longer answers that look more comprehensive) and well-known over-optimization issues."

Because of the way language AI is trained, certain kinds of phrases are repeated frequently, particularly when you generate a lot of content on the same subjects. These can be general phrases, like stating that it's an AI generating the content, but they can also be phrases related to the subject. It can be throughout a single piece or across many pieces.

Sounds like keywords, right?

Well, what happens when you over-use keywords in your content?

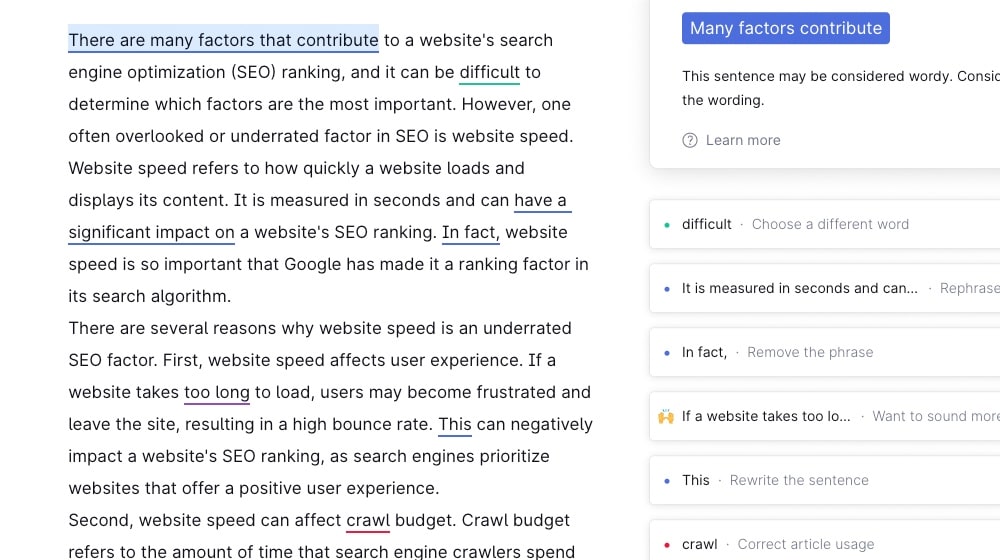
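If you want to check a draft for this kind of repetition yourself, a simple phrase count goes a long way. The sketch below is a rough illustration, not how Google or any detection tool works: the function name `repeated_phrases`, the three-word window, and the sample text are all my own assumptions.

```python
import re
from collections import Counter


def repeated_phrases(text: str, n: int = 3, min_count: int = 2) -> list[tuple[str, int]]:
    """Return every n-word phrase that appears at least min_count times.

    Hypothetical helper: a plain n-gram count, nothing more sophisticated.
    """
    # Lowercase the text and keep only letters and apostrophes as words.
    words = re.findall(r"[a-z']+", text.lower())
    # Slide an n-word window across the text and count each phrase.
    ngrams = (" ".join(words[i:i + n]) for i in range(len(words) - n + 1))
    counts = Counter(ngrams)
    return [(phrase, count) for phrase, count in counts.most_common() if count >= min_count]


# Made-up sample text echoing the over-used phrase from the warning above.
sample = (
    "As a language model trained by OpenAI, I can help. "
    "As a language model trained by OpenAI, I cannot browse."
)

for phrase, count in repeated_phrases(sample):
    print(f"{count}x  {phrase}")
```

Run it across a batch of AI drafts on the same subject, and the phrases that keep bubbling to the top are the ones an editor should vary or cut.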

9. It's Rarely Completely Smooth

Another issue that crops up in ChatGPT and other AI (though I noticed it more in Jasper than in ChatGPT) is grammatical issues. Whether you're using a set of focus keywords or just letting the AI generate what it can, you're going to run into sentences that don't quite work, misused words, and other problems.

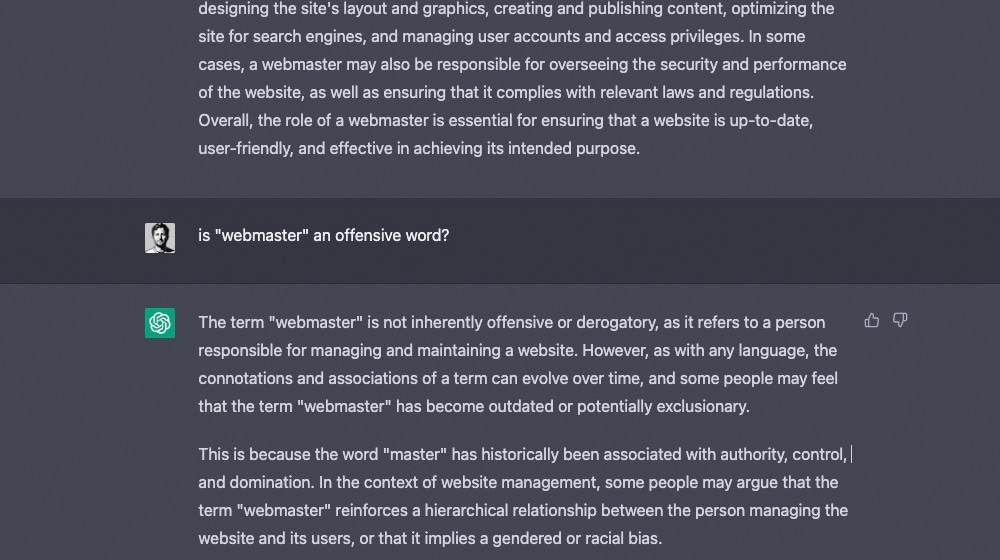

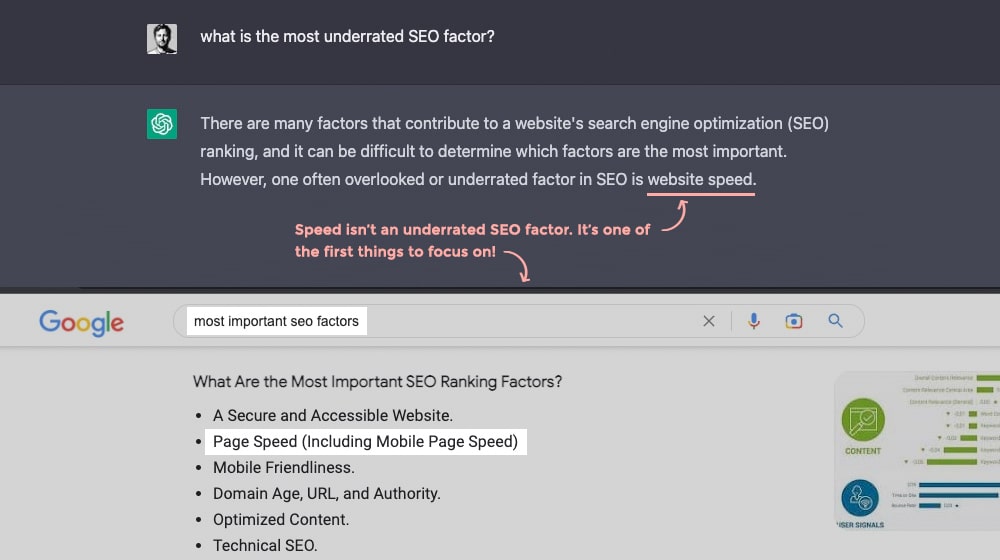

10. Complex Topics Can Be Misinterpreted

In my Jasper example, I had the AI generate a blog post about submitting blog posts to other sites, a topic I had just recently covered myself.

English is a vague language. Many words share a spelling but have very different meanings depending on the context, and many others can be used in several different ways. A sufficiently powerful AI can learn many of these, but it's not necessarily going to be accurate in picking which one to use at any given time. So, you can run into a lot of similar issues.

I don't have a specific example of this from ChatGPT, but that's because when I tried to use similar prompts to get it to generate something I could use as an example of success or failure, I just started getting errors.

"An error occurred. If this issue persists please contact us through our help center at help.openai.com."

What does this mean? I'm so tired of services that refuse to give reasonable errors.

Though that's a personal peeve, so I guess don't take it as too strict a piece of criticism.

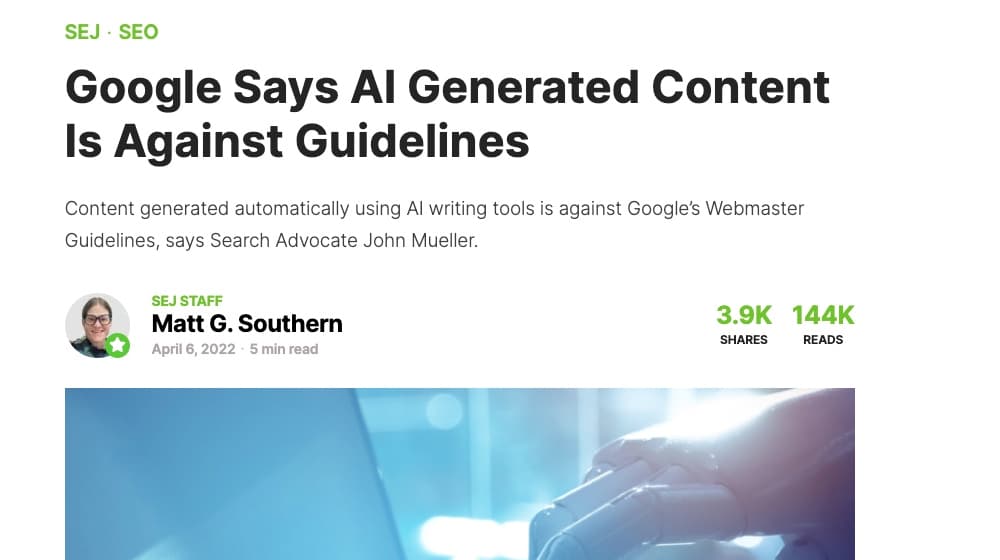

11. Google's Policies Prohibit Spammy and Manipulative Content

Google has a bit of a crisis of identity going on right now.

- On the one hand, in the past year they said that AI-generated content constitutes "automatically generated content," which is against their webmaster guidelines.

- On the other hand, they recently released their own content generation AI, and they quietly altered their guidelines to make it vaguely more acceptable to use.

Which is it?

Both, honestly. Google has never been against the use of writing tools to assist with creating content. Otherwise, they'd yell at anyone using keyword research tools or things like Grammarly, right? They just don't want you to use AI to do all the work for you. The biggest issue isn't using AI to generate content; it's using AI to game the search results.

Well, what do you think you're doing when you give ChatGPT a prompt and just uncritically publish what it gives you?

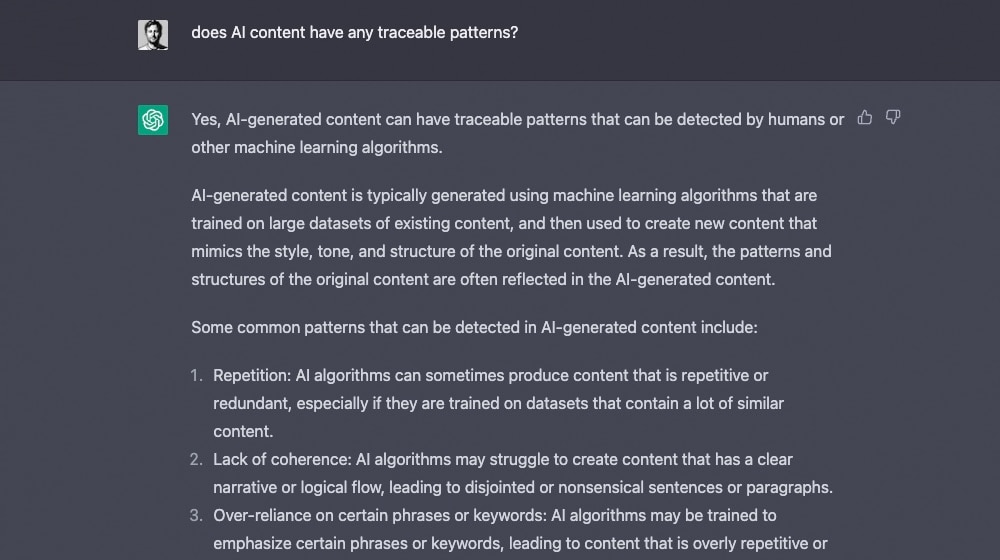

12. Google is Investing in AI Detection Tools

Everyone is aware that AI poses a threat to a whole host of industries. Universities and schools are fighting students looking to use AI to do their homework. Google is certainly looking for ways to detect AI-generated content to separate it from good content. OpenAI released its own detection tool to help in this pursuit.

Here's what ChatGPT said when I asked it if it has any traceable patterns:

If you're using AI content as a core part of your content strategy, this should make you nervous.

Will ChatGPT Be Useful to Content Marketers?

Sure! Like any tool, it can have a role if you use it as a tool rather than a replacement.

ChatGPT can't write good, unique, insightful blog posts from whole cloth. An artificial intelligence system may be able to do that in another five or ten years, but right now, we're not there yet.

What AI tools like ChatGPT can do is streamline some of the more tedious processes you do, like:

- Generate topic ideas. ChatGPT can give you many ideas for blog posts based on a prompt, and while most of them aren't going to be terribly unique or exciting, they might hit a few bases you've missed in your own research.

- Generate headlines. ChatGPT can generate blog post titles (as well as meta descriptions, image alt text, and other short-form content) for you. You still have to pick and refine the right one, but it can get you 80% of the way there.

That's really what it all comes back to. ChatGPT, as it currently stands, can be a helpful tool for some of the tertiary drudge work of content marketing. It's the same way that using computers and apps to automate web scraping and keyword research took away the jobs of people who did that all day long, the way APIs took away the jobs of data entry clerks, and the way calculators made the abacus obsolete.

Use it as an assistant, a tool, and not a replacement for the human writers that make your business thrive.

Even if your goal is to use AI to replace your writers, you'll be disappointed with the results in the end.
