Tutorial: How to Force Google to Reindex Your Site

Google's indexing is a mysterious process. It used to be that they had a legion of software "spiders," small bots that they sent out across the internet to travel from page to page and site to site, copying what they saw. In the years since, their tech has gotten more sophisticated, and at this point, they use a variety of data sources (including Chrome users) to index the internet.

Being indexed by Google is the first step in growing a website. I can foresee three groups of people likely concerned with Google's indexing process.

- Newcomers who have set up a new site will want Google to list their new webpages and will be anxious to get full index coverage.

- Site owners who have decided to shift gears will want Google to see those changes as soon as possible, notably if they changed large swaths of content and need a new indexing pass.

- Owners of older sites full of spam, black hat SEO, thin content, and other problems will want a rapid reindexing. After cleaning up that spam, they'll wish to receive a new review so that they can be indexed again.

Luckily, no matter the reason, the method is always the same.

So, how can you get the search giant's algorithm to recrawl and reindex your site?

30 Second Summary

You need Google to check and list your website pages in their search results. First, you'll want to submit your sitemap with Google Search Console and use the URL Inspection tool to check individual pages. You should also make sure your robots.txt file isn't blocking Google and that you don't have any "noindex" commands on pages you want listed. You can speed things up by posting regularly on your site, sharing content on social media, and building quality backlinks - but remember that Google indexing can still take days or weeks no matter what you do.

Google's Official Recommendation

Google updated their documentation article on recrawling URLs in July 2023. However, their guidance remains mostly the same:

If you've recently modified a page on your site, you can ask Google to re-index it using the URL Inspection tool or by submitting your sitemap.

While the URL Inspection tool is suitable for a few individual URLs, a sitemap is recommended to submit all of your URLs to Google at once, especially after significant site updates. However, remember that requesting a crawl doesn't guarantee immediate or any inclusion in search results, and crawling might take days to weeks. Furthermore, repeatedly requesting a re-crawl for the same URL doesn't expedite the process.

In that same vein, I haven't found that deleting and re-submitting the sitemap has any effect; it doesn't appear to speed up or encourage faster indexing.

Also, it might interest you to know that sitemap variables like "changefreq" and "priority" are ignored by Google. I wrote a separate article about this.

Is there anything else you can do beyond Google's advice? Well, let's talk about it.

Recognize the Limitations

The first thing to do is recognize the limitations of crawlers. As Google says,

"Indexing can take anywhere from a few days to a few weeks."

It doesn't matter if you're a brand new website or a more significant site; Google is Google. We're all ants to them.

A website rarely gains so much attention that it quickly "jumps the queue." At the same time, while there are various methods to get Google's attention and get them indexing your content, they all have more or less the same response times. You can try different approaches, but they'll all work about the same. Your mileage may vary, of course, but on average across all site owners, it evens out.

Google also doesn't necessarily make it clear to a site owner whether or not their site has been indexed. Or, rather, they do, but you need to know where to look. And no, "site:example.com" searches are not the only way to check.

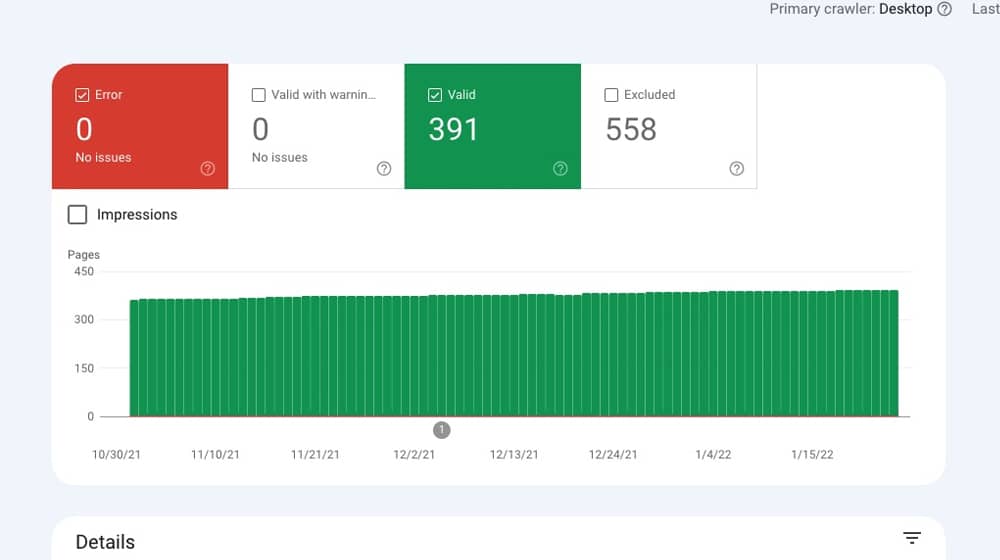

Google provides webmasters with a tool called the Index Coverage Report. If you know how indexing works and how search works as a whole, it's a handy tool.

If you don't have much idea beyond "is/isn't in the Google search results I see," you'll want to do a bit of further reading first. The report will let you know when Google crawled your pages, which pages they indexed, and which pages haven't been indexed yet.

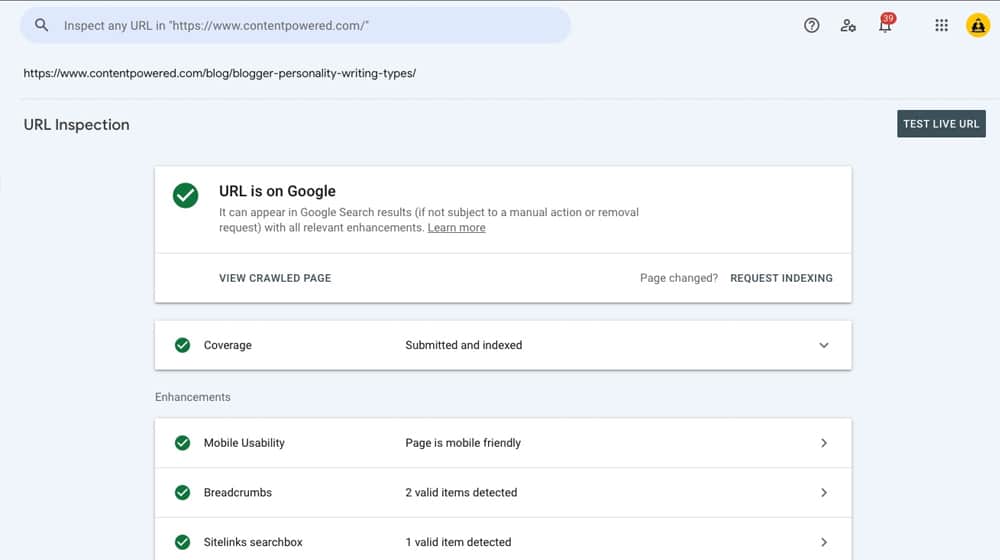

The Index Coverage Report is a full-site indexation health check. If you want to check if a single page is indexed, you can use the URL Inspection Tool instead.

Troubleshoot Indexation Problems

The first thing you'll want to do is check if there's anything on your site that would prevent Google from indexing your site. Generally, there are only a handful of such roadblocks.

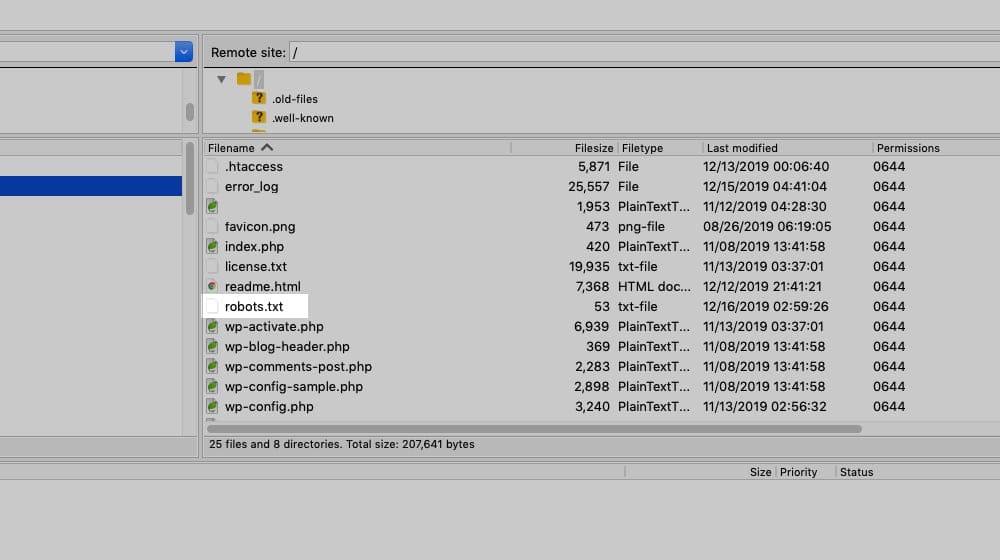

1. Check for a Robots.txt block.

The most common indexation error you're likely to encounter is a problem with your robots.txt file. Robots.txt is a text file on your server, usually in your site's root directory, that gives instructions to specific bots that might crawl your site. You can use it to tell the bots which pages you don't want them to see; this includes Google's bots, among others.

Why would you want to use this? Well:

- Maybe you're split-testing variations of landing pages and don't want the near-duplicate content indexed.

- Perhaps you're hiding the site temporarily while you revamp it or build it from scratch.

- Maybe you're trying to hide WordPress system pages or thin content, like attachment pages, although you can also use noindex in your HTML to exclude those.

Usually, it's that second one that gets people—that, or just a malformed file that accidentally hides more directories than it should.

Either way, you can check your robots.txt manually (by opening the file and reading what's inside) or using Google's tools. They have a robots.txt tester, found here.
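If you'd rather script the manual check, here's a minimal sketch using Python's standard `urllib.robotparser`. The helper name `blocked_urls` and the sample file are my own illustrations, not anything Google provides, and Python's parser doesn't match Google's rules exactly (wildcard patterns, for instance), so treat the result as a first-pass sanity check rather than the final word.

```python
from urllib.robotparser import RobotFileParser


def blocked_urls(robots_txt: str, urls: list[str], user_agent: str = "Googlebot") -> list[str]:
    """Return the URLs that the given robots.txt text disallows for user_agent."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return [url for url in urls if not parser.can_fetch(user_agent, url)]


# Hypothetical robots.txt left over from a site rebuild: it hides everything.
SAMPLE_ROBOTS = """\
User-agent: *
Disallow: /
"""

pages = ["https://example.com/", "https://example.com/blog/post-1"]
for url in blocked_urls(SAMPLE_ROBOTS, pages):
    print("blocked for Googlebot:", url)
```

To check a live site, you could paste the contents of your own robots.txt into the first argument and list the pages you expect to be indexed in the second.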

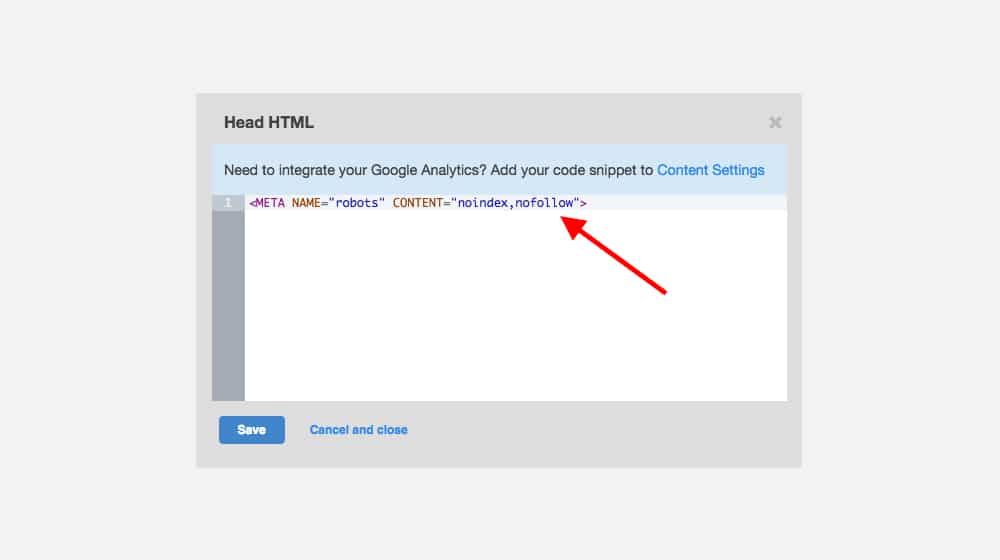

2. Check for page-level noindex commands.

Noindex is a directive, primarily for Google, that website owners can apply at the page level or the domain name level. You can use it on WordPress attachment pages to ensure that they don't appear as thin content and disrupt your SEO value.

Unfortunately, it's easy to apply them to the wrong pages accidentally and drop your whole site from search. Here's a rundown of the problem and how to check for and fix it.
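As a rough illustration of what a noindex check looks for, the sketch below scans a page's HTML for a robots meta tag and also accepts the value of an `X-Robots-Tag` response header. The names `RobotsMetaParser` and `has_noindex` are hypothetical, and the header handling is simplified (it ignores bot-specific prefixes such as `googlebot:`).

```python
from html.parser import HTMLParser


class RobotsMetaParser(HTMLParser):
    """Collect directives from <meta name="robots"> and <meta name="googlebot"> tags."""

    def __init__(self):
        super().__init__()
        self.directives = []

    def handle_starttag(self, tag, attrs):
        if tag != "meta":
            return
        attr = {key: (value or "") for key, value in attrs}
        if attr.get("name", "").lower() in ("robots", "googlebot"):
            content = attr.get("content", "")
            self.directives += [d.strip().lower() for d in content.split(",")]


def has_noindex(html: str, x_robots_tag: str = "") -> bool:
    """True if the page markup or the X-Robots-Tag header value asks not to be indexed."""
    parser = RobotsMetaParser()
    parser.feed(html)
    header_directives = {d.strip().lower() for d in x_robots_tag.split(",")}
    directives = set(parser.directives) | header_directives
    # "none" is shorthand for "noindex, nofollow".
    return bool(directives & {"noindex", "none"})


print(has_noindex('<head><meta name="robots" content="noindex, follow"></head>'))
```

Running it over the pages you actually want listed is a quick way to spot a stray directive left behind by a plugin or a staging setup.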

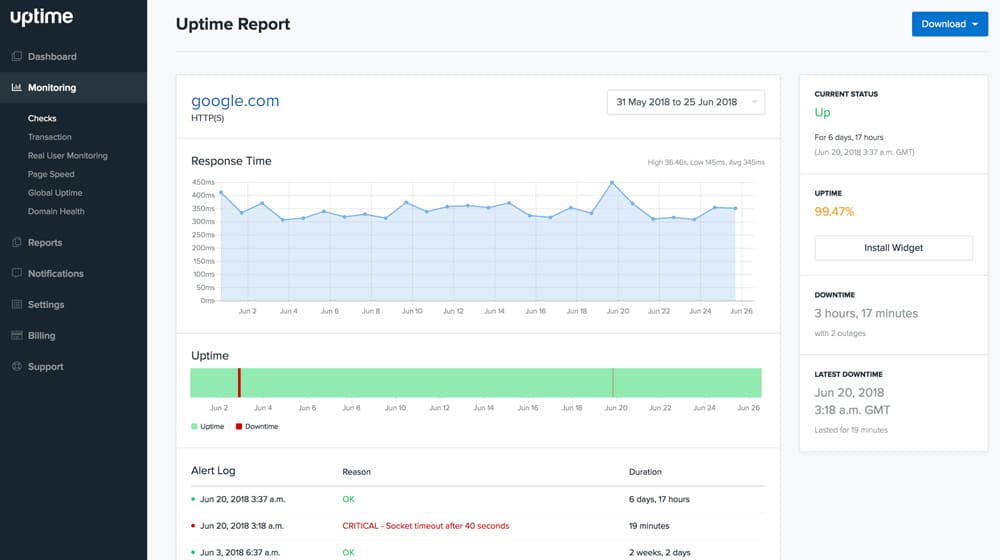

3. Check for sporadic downtime and other server errors.

One of the other reasons your site may have indexation issues is, well, coincidence. If your web host keeps dropping service, and they happen to be down when Google's spider comes by, all Google is going to see is a missing website. They can't crawl what, to them, doesn't exist.

This downtime can be tricky to diagnose because if your site is up when you check, how can you tell when it's down? The solution is to sign up for an uptime monitor. Luckily, Google's URL inspection tool can also tell you if server errors are responsible for your indexation issues.
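A hosted uptime monitor is the practical choice, but the idea behind one is simple enough to sketch. The helpers below (`probe`, `uptime_percent`, and `monitor` are names I made up for this example) fetch a URL on a schedule and report what share of the checks succeeded. Note the assumption that any 4xx or 5xx response counts as "down."

```python
import time
import urllib.error
import urllib.request


def probe(url: str, timeout: float = 10.0) -> tuple[bool, str]:
    """Fetch url once and return (is_up, detail)."""
    try:
        with urllib.request.urlopen(url, timeout=timeout) as response:
            return True, f"HTTP {response.status}"
    except urllib.error.HTTPError as err:
        # The server answered, but with an error status (4xx/5xx).
        return False, f"HTTP {err.code}"
    except OSError as err:
        # Covers DNS failures, refused connections, and timeouts.
        return False, f"unreachable: {err}"


def uptime_percent(results: list[bool]) -> float:
    """Share of successful checks, as a percentage."""
    if not results:
        return 0.0
    return 100.0 * sum(results) / len(results)


def monitor(url: str, checks: int = 3, interval_seconds: float = 60.0) -> float:
    """Probe url several times, logging each result, and return the uptime percentage."""
    results = []
    for attempt in range(checks):
        is_up, detail = probe(url)
        print(time.strftime("%Y-%m-%d %H:%M:%S"), url, detail)
        results.append(is_up)
        if attempt < checks - 1:
            time.sleep(interval_seconds)
    return uptime_percent(results)


# Example (makes real network requests):
# monitor("https://example.com/", checks=5, interval_seconds=300)
```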

4. Check for canonicalization issues.

Another issue arises when you check whether an individual page is indexed and find that it isn't, but another version of it is. Suppose you have two copies of a page (for minor split testing, URL-parameter variations, or site search results). In that case, you'll want only the main version of the page to be indexed to avoid duplicate content penalties.

The official way to do this is to use the rel="canonical" attribute to tell Google which version is the "official" page. If you're checking a page that should be official and isn't, you may have implemented the canonical tags incorrectly, and they'll be identifying a different page as the canonical version.
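To make the canonical check concrete, here's a small sketch that pulls the rel="canonical" link out of a page's HTML and compares it to the URL you expected to be the official one. `CanonicalParser` and `canonical_problem` are hypothetical names, and the comparison is deliberately naive (it only ignores a trailing slash).

```python
from html.parser import HTMLParser
from typing import Optional
from urllib.parse import urljoin


class CanonicalParser(HTMLParser):
    """Collect the href of every <link rel="canonical"> tag."""

    def __init__(self):
        super().__init__()
        self.canonicals = []

    def handle_starttag(self, tag, attrs):
        if tag != "link":
            return
        attr = dict(attrs)
        rels = (attr.get("rel") or "").lower().split()
        if "canonical" in rels and attr.get("href"):
            self.canonicals.append(attr["href"])


def canonical_problem(html: str, page_url: str) -> Optional[str]:
    """Return a short description of a canonical issue, or None if the tag matches page_url."""
    parser = CanonicalParser()
    parser.feed(html)
    if not parser.canonicals:
        return "no canonical tag found"
    if len(set(parser.canonicals)) > 1:
        return "multiple conflicting canonical tags"
    # Resolve relative hrefs against the page's own URL.
    target = urljoin(page_url, parser.canonicals[0])
    if target.rstrip("/") != page_url.rstrip("/"):
        return f"canonical points elsewhere: {target}"
    return None


page = '<head><link rel="canonical" href="https://example.com/page"></head>'
print(canonical_problem(page, "https://example.com/page?variant=b"))
```

A result of `None` for the page you consider official, and a "points elsewhere" message for its variants, is the pattern you're hoping to see.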

There are many other related issues as well, so check Google's indexation report and see what it says. Their resource page also has a complete list of the errors they can report and what they mean.

The Official Means of Indexing Content

There are two "official" ways to get Google to index your site. If you've been following along and clicking the internal links I've added, you probably already know what they are; if not, well, here you go.

The two options are for individual links and bulk sites. So, if most of your site is indexed, but a few essential, new, or important pages seem to be missing, use the first option. If you've renovated most of your site, if your site is new, or if you want a complete indexation pass, use the second option.

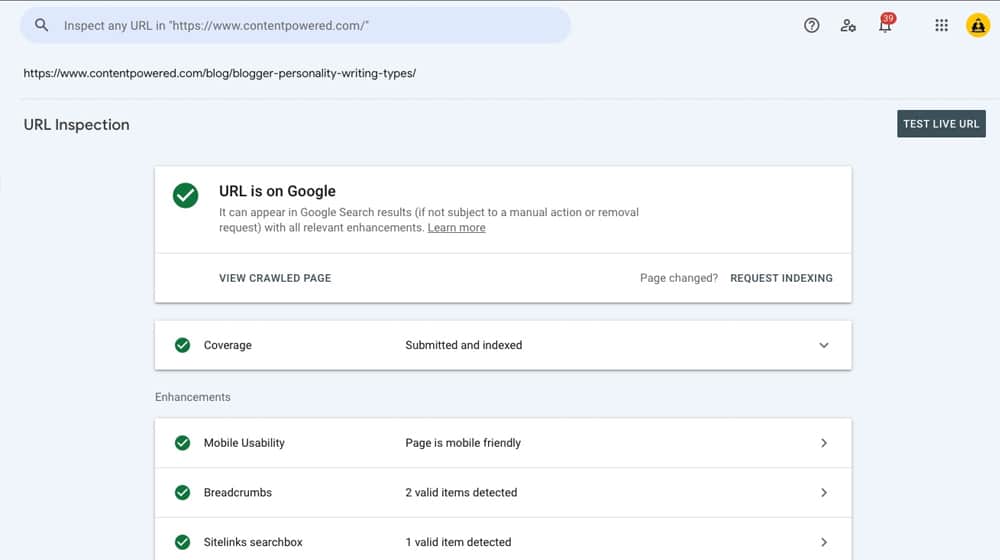

1. Use the URL inspection tool in Google Search Console.

The URL inspection tool is part of the Google Search Console (formerly Google Webmaster Tools), and you can find it here.

It's a tool that gives Google a URL and says, "Hey, check this out and tell me what you see, okay?" They will, and they'll generate a report about the URL that includes things like the server status, indexation status, robots.txt and URL directives, and more.

Once you select the inspection tool from the search bar and request an inspection of a URL, you have a button that says "Request indexing." This step is how you "submit" a single page to Google. Since there's no other official URL submission process, this is mostly the best you get for a single page.

You can use this tool for more than one URL, but there's a cap on how many you can inspect before Google cuts you off for the day. Unfortunately, Google doesn't state what that number is. If you reach it, you'll only know because the inspector will throw an error that you've exceeded your daily quota.

The URL inspection lets you fetch as Google's crawler, and when Google does that, they do an indexation pass while they're there. Unless, of course, a robots.txt, server error, or other directive tells them not to.

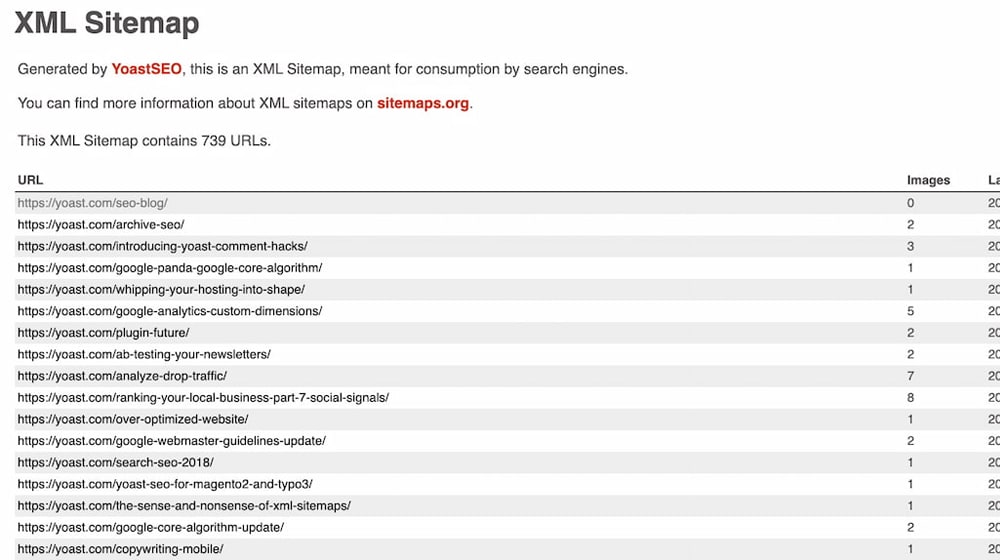

2. Submit a sitemap.

If you want to tell Google to index more pages than the URL inspector quota allows, or you want to ask them to index your whole site, submitting a sitemap is the best way to go. A sitemap is just an XML file that says, "here's a list of all the pages on my site, check them out," and Google will, eventually, oblige. As mentioned above, it can take anywhere from a couple of days to a couple of weeks for them to get around to it.

Google supports Text, Atom, RSS, and XML sitemaps, but XML is by far the best. It has the most data, is the easiest to generate, and is often made by CMSs automatically. A sitemap can be up to 50 MB (uncompressed) and 50,000 URLs, so all but the most extensive websites can submit them. Even then, those massive sites can often generate section-specific sitemaps and offer those individually (with a sitemap index, which is a collection of sitemaps). If you have an SEO plugin on a WordPress site, such as Yoast SEO, then your sitemap will be generated automatically.
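If your CMS doesn't generate a sitemap for you, a basic one is easy to build by hand. The sketch below uses Python's standard `xml.etree.ElementTree` to write a minimal XML sitemap, split a long URL list into files of at most 50,000 URLs, and build a sitemap index pointing at those files. The function names are my own, and the output only includes the required `<loc>` element.

```python
import xml.etree.ElementTree as ET

SITEMAP_NS = "http://www.sitemaps.org/schemas/sitemap/0.9"
MAX_URLS_PER_SITEMAP = 50_000


def build_sitemap(urls: list[str]) -> bytes:
    """Return a minimal XML sitemap listing the given page URLs."""
    urlset = ET.Element("urlset", xmlns=SITEMAP_NS)
    for url in urls:
        ET.SubElement(ET.SubElement(urlset, "url"), "loc").text = url
    return ET.tostring(urlset, encoding="utf-8", xml_declaration=True)


def split_into_sitemaps(urls: list[str], chunk_size: int = MAX_URLS_PER_SITEMAP) -> list[bytes]:
    """Build one sitemap per chunk so no file exceeds the URL limit."""
    return [build_sitemap(urls[i:i + chunk_size]) for i in range(0, len(urls), chunk_size)]


def build_sitemap_index(sitemap_urls: list[str]) -> bytes:
    """Return a sitemap index that points at the individual sitemap files."""
    index = ET.Element("sitemapindex", xmlns=SITEMAP_NS)
    for url in sitemap_urls:
        ET.SubElement(ET.SubElement(index, "sitemap"), "loc").text = url
    return ET.tostring(index, encoding="utf-8", xml_declaration=True)


print(build_sitemap(["https://example.com/", "https://example.com/blog/"]).decode("utf-8"))
```

You'd write each result to a file with `open(path, "wb")` and upload it somewhere Google can fetch it, typically your site's root directory.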

There are technically three different ways to submit your sitemap to Google.

- Add it to robots.txt. All you need is a line that says "Sitemap: http://example.com/sitemap.xml," and the next time Google looks at your robots.txt, they'll find the sitemap.

- Ping it. Google has accepted limited ping requests via a simple GET request to https://www.google.com/ping?sitemap=FULL_URL_OF_SITEMAP. Be aware that in June 2023 Google announced it was deprecating this ping endpoint, so don't rely on it going forward.

- Submit it via the Google Search Console. The Sitemaps report allows you to submit your sitemap directly.
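For the robots.txt route, it's worth confirming that the Sitemap line is actually being picked up. Here's a short sketch, assuming Python 3.8 or later, that uses the standard library's `RobotFileParser.site_maps()` to list the sitemaps a robots.txt file advertises. The helper name `advertised_sitemaps` is made up for this example.

```python
from urllib.robotparser import RobotFileParser


def advertised_sitemaps(robots_txt: str) -> list[str]:
    """Return every sitemap URL declared by a 'Sitemap:' line in robots.txt."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    # site_maps() returns None when the file declares no sitemaps.
    return parser.site_maps() or []


SAMPLE_ROBOTS = """\
User-agent: *
Disallow:

Sitemap: http://example.com/sitemap.xml
"""

print(advertised_sitemaps(SAMPLE_ROBOTS))
```

An empty list means crawlers reading your robots.txt won't learn about the sitemap from it, so you'd need to submit it another way.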

The third one, via the Search Console, is generally the best. Every method takes about the same amount of time anyway, so you might as well use the one that has a history and feedback built into it, right?

As always, the Search Console is full of every tool you could want as a site owner. It also lets you monitor the website traffic that you're generating from Google's SERPs, monitor the performance of your new pages, and dig into the impressions, click-through rate, and organic traffic for specific pages.

Those are the two "official" ways to get Google to index your content. What about unofficial ways?

Unofficial Means to Google Reindexing

As with anything as fundamental to SEO and as poorly documented as indexation is/used to be, there are dozens of "hacks" available online from SEO pros to help you speed up or force indexation by circumventing the usual process. Here are the ones that work the best.

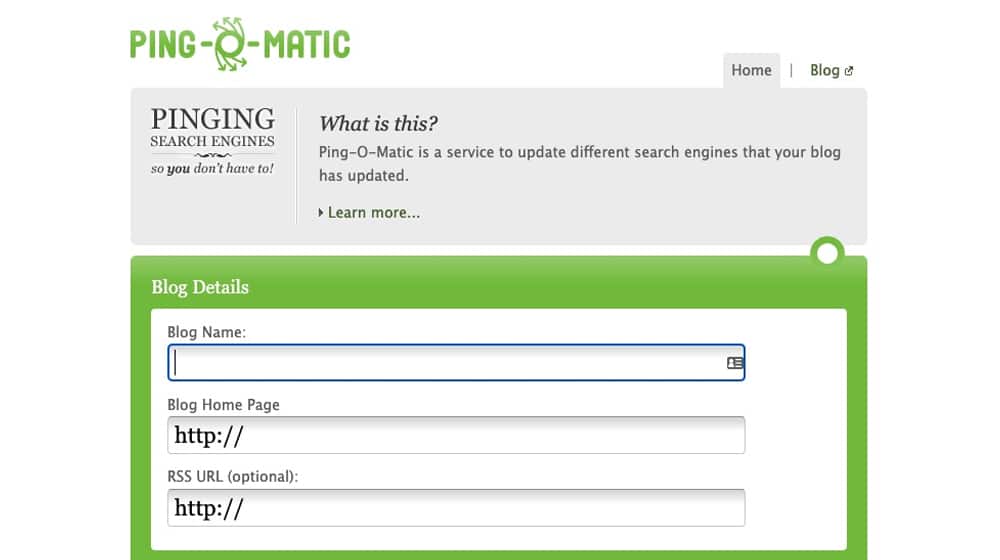

1. Use a ping service.

The first option is to use a third-party ping service. A service like Ping-O-Matic, for example, pings a handful of different services (such as Blo.gs, FeedBurner, and Superfeedr) to get your content picked up by them.

Because these services are already well indexed and carry some clout, your content gets picked up by Google as a side effect of their indexation.
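Most people just use the web form, but ping services of this kind have traditionally accepted the XML-RPC `weblogUpdates.ping` call, so a ping can be scripted. The sketch below is built on assumptions: the endpoint URL in particular is something you should verify against the service's own documentation before using it, and both function names are mine.

```python
import xmlrpc.client

# Assumption: verify this endpoint with the ping service before relying on it.
PING_ENDPOINT = "http://rpc.pingomatic.com/"


def build_ping_payload(site_name: str, site_url: str) -> str:
    """Return the XML-RPC request body for a weblogUpdates.ping call."""
    return xmlrpc.client.dumps((site_name, site_url), methodname="weblogUpdates.ping")


def send_ping(site_name: str, site_url: str, endpoint: str = PING_ENDPOINT):
    """Send the ping over the network and return the service's response."""
    server = xmlrpc.client.ServerProxy(endpoint)
    return server.weblogUpdates.ping(site_name, site_url)


# Building the payload is offline and safe to run; send_ping makes a real request.
print(build_ping_payload("Example Blog", "https://example.com/"))
```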

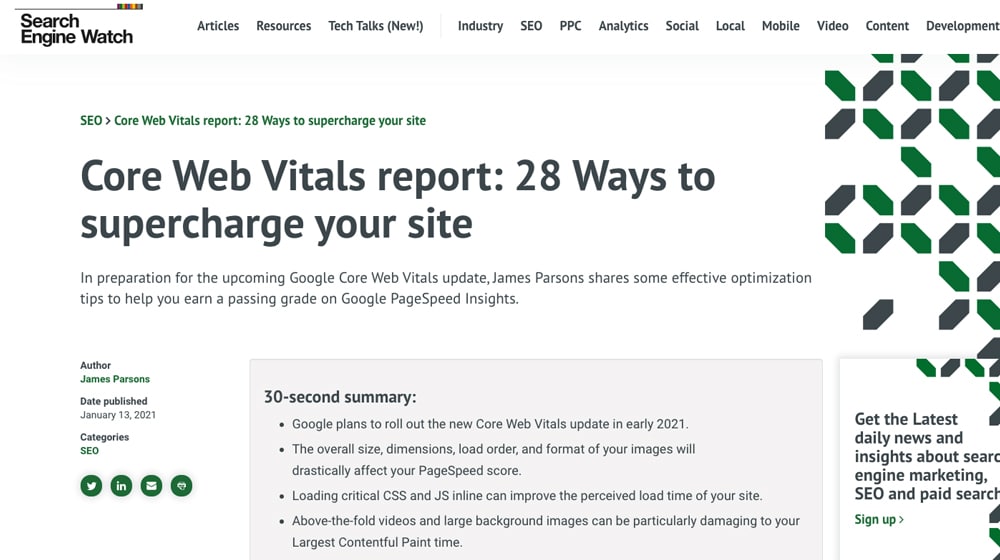

2. Try guest posting and link-building outreach.

The theory behind a ping service is to get your site linked on higher-profile domains. When Google indexes those domains and finds links to your site, they will automatically follow and crawl those links and the pages they point to. Then, they'll go ahead and crawl your site.

You can do the same thing with added SEO value by performing link outreach and guest posting. These serve to get Google to look at your site, but they also build content, thought leadership, and citations, as well as backlinks your domain can use.

3. Share your content on social media.

Google pays attention to social networks, Facebook and Twitter in particular. They don't use a direct "firehose" integration anymore, but they still crawl and index as many sites as possible. So, if your content gets posted and shared a lot on social media, Google has a higher chance of picking it up.

Twitter is the easiest to use for this purpose.

4. Maintain an active blog.

Blogging is one of the best things you can do for a site, and I'm not just saying that because I'm biased as a blog content marketer. The simple fact is that Google gives preferential treatment to sites that keep themselves updated with content. If you can produce decent quality content regularly, you give Google a reason to keep checking your site for updates. If they know you publish a new post every Monday, they'll check every Monday or Tuesday for that new post and index it right away.

The trick is, while this can help you keep your site up to date, it isn't going to help Google index you from scratch. They don't know they need to check you regularly until you've been publishing content regularly for a while, after all.

Indexing can take a while, in some cases weeks or even months, especially if your site is brand new or you don't update it very often. Regularly creating new content will train search engines to crawl your site more frequently, so this will be less of an issue for you in the future.

So, there you have it; the best ways to get Google to reindex your website, no matter why you need them to do it. I recommend the sitemap and URL inspection methods, combined with regular blogging, to establish both need and consistency in indexation. Once those steps are crossed off your list, you shouldn't have to worry about indexation again. If your content is high quality, your website structure is solid, and your sitemap is submitted to Google, the only thing left to do is wait.

April 17, 2022

Our site is still quite new so we'll try submitting a sitemap and see if that works for us. Thanks a lot!

April 29, 2022

Hey Shane!

Glad this helped you! If you haven't yet submitted your sitemap, that will speed things up.

I wouldn't try re-submitting it if you've already submitted it to Google; it won't speed anything up.

The exception is if Google had trouble reaching your server the first time they tried fetching it. In that case, deleting and re-submitting it is a good idea.

December 26, 2022

Great article, this helped me get indexed

December 29, 2022

Thanks Angel, congrats! I'm glad to hear that.