Will Google Reverse Their Stance on AI Content in 2025?

AI is the hottest topic in marketing, SEO, tech, business, and pretty much everywhere else. It's proving to be a hugely disruptive technology in ways both good and bad. It's responsible for some people making millions of dollars and other people being denied medical care. It's a technological reflection of human nature under capitalism: the drive to exploit and come out on top, aimed in a different direction than those urges usually are.

Setting aside business uses of AI on the back end, casual use of AI as a meme or gimmick, and narrow AI focused on solving specific problems, let's drill into marketing, SEO, and content production. After all, I run a content marketing agency, so this is squarely within my wheelhouse. I've also been around the block for a while, and I've been through several life-altering changes to the way Google works over the years.

So let's talk about it. Why is AI content a big deal, what is Google's stance on it, and where do I think it's going in the next few years?

I've made some bold predictions for 2025 and beyond, but I need to lay the groundwork to get to them first!

Why AI Content is a Big Deal

AI content is a huge deal in marketing because it is effectively a tool that lets you save vast amounts of time and money that would otherwise be spent on creating content.

Without AI, you would have to:

- Do keyword and topic research to find opportunities worth spending your limited time and money to target.

- Do research and develop a content brief to outline what you want out of a piece of content.

- Hire a freelance writer or send the brief to a paid employee.

- Wait for that content creator to do their own research and produce a piece of content.

- Go back and forth with edits as necessary.

- Work with a graphic designer or buy stock photos to add images to a post.

- Publish the content and send it through your promotional process.

Every single blog post would have to go through at least that process. In many cases, there are other people involved, there may be more back-and-forth, there may be interviews or unique research, and more. This is effectively the baseline.

With AI:

- Ask an AI-powered system to give you a list of keywords and receive hundreds or thousands of them in minutes.

- Pick a keyword from the list and ask the AI to give you an outline for content, or, in some cases, just ask it to create content on that keyword outright.

- Have a different AI system (or even the same one, now) generate some images to go along with it.

- Feed it into your blog, hit publish, and go back to step 1.

What used to take days or weeks and involve multiple people is now the job of one person and a piece of software, which can (using an API) repeat the process hundreds of times per day.

If your mind is already screaming at you about all the problems with this, don't worry; so is everyone else's, except for the people selling AI API access.

One thing I don't think people talk about enough when discussing AI in content, though, is how much of it centers around one company. No, not OpenAI; I'm talking about Google.

All of this is, in a way, Google's fault. Not because they made the AI (though their own AI isn't much better) and not because they haven't cracked down on it, but because none of it would be effective, necessary, or even workable without Google.

AI content's value comes, almost entirely, from that content being able to exist on websites as a way to deliver ads, promote affiliate links, push a storefront or a service, or otherwise be monetized. Without that monetization channel, it's just noise. And those monetization channels effectively only work because of Google directing traffic to those sites.

Now, sure, this isn't the only repercussion of AI content. Amazon, for example, has a similar, if quieter, crisis happening with its immense saturation of dull, lifeless AI-generated books. YouTube is already starting to get hit with the same phenomenon.

In a way, these AIs can only exist because of the value they're given by centralized content platforms. But that's the world we have to live in. Fantasizing about a decentralized internet is, for now, still a fantasy.

But I digress. Google has a significant responsibility in its position as governor of SEO and holder of roughly 90% of the global search market. So, what are they doing about it? Is AI content here to stay, or is it just spam waiting to be washed away?

What Is Google's Stance on AI Content, Anyway?

Google, you might be surprised to learn, doesn't actually have a policy (yet) specifically on AI content.

Everything they have to say about it can be found on the page titled "Google Search's guidance about AI-generated content" in their developer section.

This post boils down to a few key points.

- Google wants to reward high-quality, valuable, trustworthy content, no matter how it is produced.

- Google doesn't mind automation and views AI as just another automation tool.

- Google is increasingly pushing E-E-A-T and warns that raw AI output doesn't have that E-E-A-T value.

If you read through this and find it to be kind of frustrating, don't worry; you're not alone. Google is essentially trying to ride the middle ground, trying not to say AI is bad across the board (especially because they're producing their own AI), but also trying to avoid encouraging the kinds of people who would tell an AI to write 10,000 blog posts for them this week and publish them all.

Of everything on that page, there's one specific quote I want to draw attention to.

"For example, about 10 years ago, there were understandable concerns about a rise in mass-produced yet human-generated content. No one would have thought it reasonable for us to declare a ban on all human-generated content in response. Instead, it made more sense to improve our systems to reward quality content, as we did."

Think about this for a bit; I'll come back to it later.

The Problem(s) with AI Content

There are a lot of problems with ChatGPT, LLMs in general, and AI used for content marketing. I'm going to touch on the biggest ones, but there's really only one that's relevant to Google, and I'll explain why.

Ethics in Training and Development

The first significant issue is the ethics surrounding the training and creation of an LLM. It's pretty widely known at this point that the major AI systems (ChatGPT for text, Midjourney for images, etc.) were built on massive amounts of intellectual property used without permission, ranging from published books to works of art to blatantly illegal content like CSAM and private medical records.

The companies behind these technologies essentially hoovered up all the data they could find from any source they could get their hands on.

This is an ongoing fight. Some people want to use copyright to protect their work from being used in training, though this could have knock-on ramifications for things like fair use, parody, and satire, so it's a tricky line to walk. Others just want to be able to opt out, though at this point the technology and the models exist; the cat is out of the bag, and there's no easy way to strip individual contributions out of the models. It's that "move fast, break things, ask forgiveness rather than permission" attitude that so often hurts people as it goes.

Sad to say, Google doesn't much care about the ethics behind the models being used. They might pay lip service to it for Gemini, but they don't have the authority or desire to reach out and try to tell other companies what they can and can't do in their operations. That's a matter for courts and governments.

Damage to Employment and Careers

Another concern is that these tools are putting people out of work and destroying livelihoods without providing any alternative. And that's true! If you take a look at any of the "content mill" sites like Textbroker, you'll see a fraction of the work that used to be available. Thousands of freelance content creators – largely writers but also graphic designers and artists – suddenly find it incredibly difficult to find work. Many have abandoned the field.

A secondary problem here, and one that we likely won't actually encounter for another few years, is that it damages the pipelines of skilled employment and development. There's still room for high-end content production, the bespoke, deeply-researched, uniquely-voiced, powerful content created by experts. The AI systems can't quite reach those heights, at least not without a skilled human guiding, revising, and reviewing the output. But when all of the "entry-level" jobs are gone, where are those skilled workers going to come from?

On the one hand, any new technology will inevitably have this kind of fallout. We don't have lamplighters, or telephone operators, or water carriers anymore, and many once-widespread skilled trades, like blacksmithing and weaving, now survive mostly among hobbyists.

On the other hand, when a societal shift makes a group of skilled workers obsolete, we often try to support them with job training and opportunities, such as the job training and resources we've built up for coal miners as we try to phase out coal. No one has done anything like that for freelance writers, and I rather doubt anyone will.

Google, again, doesn't have to care about this. It's not part of their purview; it's just an ongoing change to the world and one they have to navigate as much as we do.

Factual Accuracy and Hallucinations

Another issue comes from AI hallucinations. One thing about LLMs is that they don't have any inbuilt way to understand knowledge or facts. What they report as factual may change from query to query, and it often reflects – and amplifies – counterfactual stereotypes.

AI hallucinations are bad, but for the same reasons any misinformation is bad, with the primary difference being that a human spreading misinformation may be doing it maliciously, while an AI has no intent behind it. Indeed, humans hallucinate and get things wrong as much as AI, though in different ways and for different reasons.

Google cares about this one! But it's not actually any different from other misinformation. When content has factual inaccuracies in it, it may lose value in terms of E-E-A-T, or it may not. After all, there are a lot of high-ranking authority sites publishing content that gets things wrong through bias, lack of information, or deliberate misinformation. Google wants to provide useful information, but it wants to avoid being an arbiter of fact. If Google starts enforcing what is and isn't factual, it opens itself up to a massive world of liability every time it gets something wrong, which it inevitably will.

AI Content Made for Search

The biggest problem with AI content that Google does care about and does address is content made en masse for the purpose of gaming the search results. When a site takes a keyword, spins up 250 variations of that keyword, has an AI create 250 interrelated blog posts, each targeting one of those variations, and publishes all of them, it's abundantly clear that the goal is to saturate the search results for that keyword with that domain.

It's also pretty easy for Google to detect. Never mind using AI detectors; Google can tell when a newly registered domain publishes hundreds of pieces of content out of nowhere, or when an older site that published once a month suddenly publishes 50 times a week. They don't even need sophistication to identify that kind of abuse. Of course, they do have that sophistication, so they can detect even the people who go a little out of their way to hide it.
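To make the "older site suddenly publishes 50 times a week" signal concrete, here is a toy sketch of a publishing-velocity check. Google has not published how its spam systems work, so this is not a reconstruction of anything they do; the function name `flag_publishing_spikes` and its thresholds (an 8-week trailing baseline, a 10x jump, and a 20-post floor) are hypothetical choices for illustration only.

```python
from statistics import median


def flag_publishing_spikes(weekly_counts, baseline_weeks=8, multiplier=10.0, min_posts=20):
    """Return the indexes of weeks whose post count jumps far above the trailing baseline.

    Hypothetical heuristic: compare each week to the median of the previous
    `baseline_weeks` weeks and flag it if it exceeds that median by `multiplier`.
    """
    flagged = []
    for i in range(baseline_weeks, len(weekly_counts)):
        baseline = median(weekly_counts[i - baseline_weeks:i])
        # Floor the baseline at 1 so a site that was nearly silent still yields a usable threshold.
        threshold = max(baseline, 1) * multiplier
        # Require a minimum absolute volume so small sites aren't flagged for going from 1 post to 11.
        if weekly_counts[i] >= min_posts and weekly_counts[i] > threshold:
            flagged.append(i)
    return flagged


# A site that posted about once a month, then suddenly published 50 posts a week.
history = [1, 0, 0, 0, 1, 0, 0, 0, 50, 50]
print(flag_publishing_spikes(history))  # [8, 9]
```

A check this crude would never be used alone; the point is only that volume spikes are cheap to spot without reading a single word of the content. It also doesn't cover brand-new domains, which have no baseline to compare against.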

Google's one firm line in the sand is to penalize anyone who abuses their algorithms, especially if it's to exploit search results to make themselves money rather than to provide value to the people doing the searching. It's pretty critical that they do this, because the moment Google loses enough trust that they breach the trust thermocline, it's effectively all over for them. We're seeing that now with Twitter/X, a site that a few years ago you would have thought would be thriving forever. Nothing in this world is forever.

Will Google Reverse Course?

No.

There's a reason I say this so unequivocally, and it's this: most people don't know what course Google is on.

The default assumption is that Google's development of their own AI, and their lack of public statements condemning AI content, means that they support it, tacitly or overtly. After all, they wouldn't be shy about slamming a ban hammer down if they thought it was bad for business, right?

Well, not exactly. Google is on course to bring that hammer down in the relatively near future, but to understand why, you need to look at the past.

How Google Has Handled Other SEO Strategies and Abuse

Consider the following.

Keyword Density

Many years ago, when Google was a relatively young search engine, they relied primarily on keywords and links to evaluate any kind of site, rank them for relevance using methods we would view as quaint and simple today, and serve them to visitors.

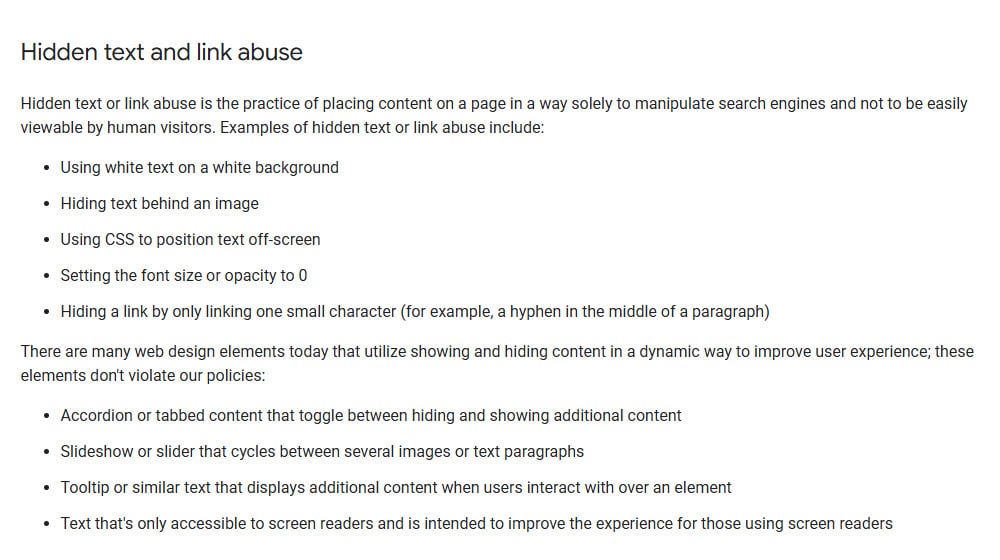

Marketers realized quickly that when specific keywords were mentioned, content could rank for those keywords. They further realized that the more they mentioned those keywords and the more keywords they mentioned, the more they could rank. People started using all sorts of methods to add more keywords, from injecting them in nonsensical sentences mid-content, to hiding them in size 0.1 font at the bottom of a page, to making them the same color as the background, to hiding them behind images.
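"Keyword density" was the metric behind all of this: how much of a page's text is taken up by the target keyword. Here is a minimal sketch of that arithmetic, assuming the common "occurrences × words in the phrase ÷ total words" formulation. SEO tools compute it in slightly different ways, and Google has never said it uses any such ratio; the function name `keyword_density` is hypothetical.

```python
import re


def keyword_density(text, keyword):
    """Percentage of the words in `text` accounted for by occurrences of `keyword` (a word or phrase)."""
    words = re.findall(r"[a-z0-9']+", text.lower())
    phrase = re.findall(r"[a-z0-9']+", keyword.lower())
    if not words or not phrase:
        return 0.0
    n = len(phrase)
    # Count every position where the full phrase appears as a run of consecutive words.
    hits = sum(1 for i in range(len(words) - n + 1) if words[i:i + n] == phrase)
    return 100.0 * hits * n / len(words)


stuffed = "Cheap shoes. Buy cheap shoes here, the best cheap shoes online."
print(round(keyword_density(stuffed, "cheap shoes"), 1))  # 54.5
```

A sentence where more than half the words are the target phrase is exactly the kind of text early keyword stuffing produced, and exactly what later updates learned to penalize.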

Of course, Google caught on and penalized those kinds of strategies, but they can't just ban all hidden text outright; after all, shutter-window FAQs, slide-on CTAs, and even things like video transcripts embedded in metadata are all valuable. So, it took them a while to address the obvious problem, but when they did, they very quickly made it clear what was and wasn't allowed.

Guest Blogging

Guest blogging is a famous example in SEO of a strategy that worked, until it didn't, until it did.

SEO runs on links. Backlinks are extremely important. So, finding ways to get more backlinks is extremely important. Marketers have tried pretty much everything over the years, but one of the more enduring strategies was guest posting.

In short, guest posting is the idea of reaching out to another site and saying "hey, I'm an expert in X, I'll write about X, Y, or Z for you, all I'd ask is that you credit me with a link." Site owners get free content, guest posters get a valuable backlink, and a win is a win.

This was, in short order, abused to hell and back. Low-quality sites threw the same low-quality content to anyone who could be tricked into publishing it. Content would be spun or outright stolen. Content would be overloaded with links. People would pay for links, which is a big no-no in Google's terms. All of it culminated in Matt Cutts' famous "Decay and Fall of Guest Blogging" post, which killed the strategy in the eyes of millions of marketers.

Guest blogging still exists and is still effective, if you do it right. But what counts as effective, and what counts as doing it right, changed.

Parasite SEO

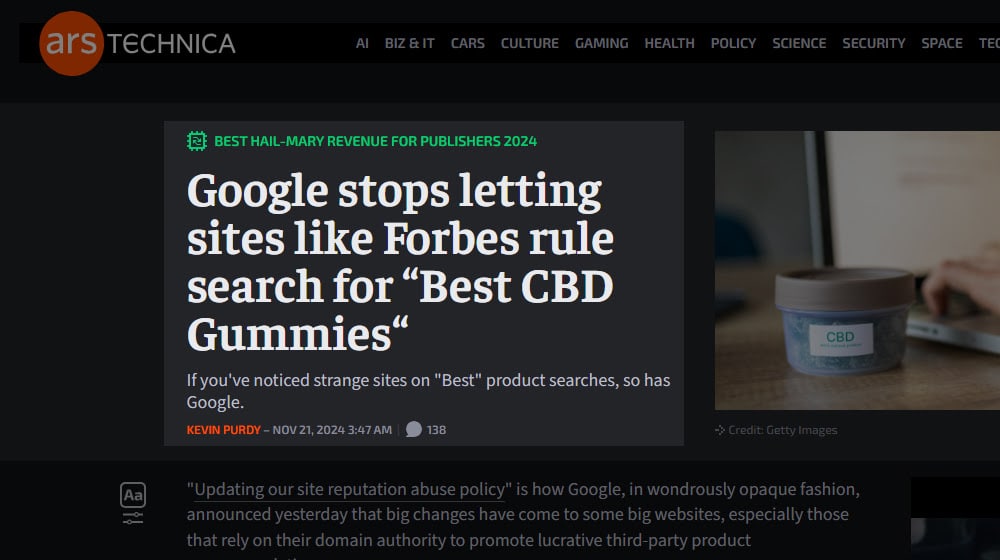

Another big, and much more recent, example is called parasite SEO. Throughout the internet, there are big name sites that are consistently in the top search results for, seemingly, everything. Big sites like Forbes, CNN, Fortune, Time, and others. It makes sense, right? They're huge authorities and hubs of journalists, reporters, and experts.

What started to happen, though, was that those sites began weaponizing this position. They viewed themselves as too big to fail, and so they let other people blatantly violate Google policies. They essentially created unmoderated, user-contributed sections of their sites, which could cover topics the sites themselves normally wouldn't. Two of the biggest examples are CBD gummies and pet insurance.

Both of those were called out explicitly as examples when Google, last month, pushed an update to its "site reputation abuse policy", which is the rule against doing exactly what those sites had been doing. That update trashed entire massive subsections of those sites, with Forbes seeing a 43% drop in traffic to the affected section, and Time losing 97% of theirs.

Google isn't afraid of big changes when those changes are warranted.

Article Spinning and Rewriting

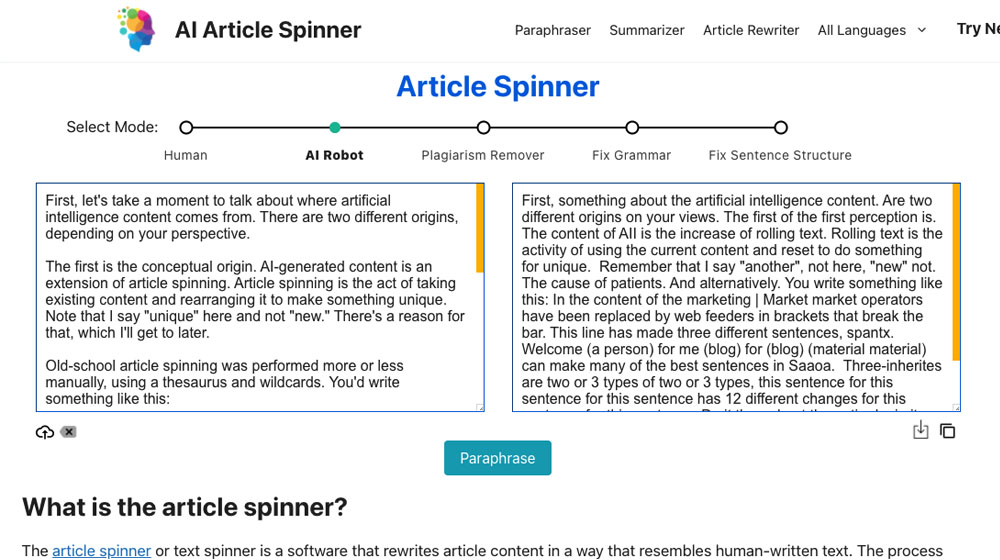

Another big example is taking existing content and rewriting it or spinning it into something "new" for your own site.

Article spinning was the practice of taking a piece of content and swapping out specific words and phrases for synonyms. You could have a framework of a post, but through changing the specific words used, "spin" up to dozens or hundreds of variations of that post, which could then be used to cover keyword variations, be submitted to directories for backlinks, or used as fodder for guest posting.

Old-school article spinning is dead because of Google's integration of natural language processing, a sort of distant precursor to generative AI. Google doesn't necessarily index a blog post as-is; it can match text fuzzily, with an understanding of synonyms. That makes spun versions of the same content look functionally identical to one another, which makes them immensely easier to spot.
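Here is a toy illustration of why synonym-awareness defeats spinning. It uses word shingles (overlapping three-word sequences) and Jaccard similarity, a standard near-duplicate detection technique, plus a tiny hypothetical synonym table. It is not how Google actually does it (that isn't public), and the names `SYNONYMS` and `similarity` are made up for this sketch.

```python
import re

# Hypothetical synonym table: each variant maps to one canonical word.
SYNONYMS = {
    "quick": "fast", "rapid": "fast", "speedy": "fast",
    "boost": "improve", "enhance": "improve",
    "site": "website", "webpage": "website",
}


def tokenize(text):
    return re.findall(r"[a-z']+", text.lower())


def normalize(words):
    # Collapse every known synonym onto its canonical form.
    return [SYNONYMS.get(w, w) for w in words]


def shingles(words, k=3):
    # The set of overlapping k-word sequences in the text.
    return {tuple(words[i:i + k]) for i in range(len(words) - k + 1)}


def jaccard(a, b):
    # Size of the overlap divided by size of the union; 1.0 means identical sets.
    return len(a & b) / len(a | b) if a | b else 1.0


def similarity(text_a, text_b, k=3, use_synonyms=True):
    a, b = tokenize(text_a), tokenize(text_b)
    if use_synonyms:
        a, b = normalize(a), normalize(b)
    return jaccard(shingles(a, k), shingles(b, k))


original = "A quick way to boost your site traffic is to enhance your page speed"
spun = "A rapid way to improve your webpage traffic is to boost your page speed"
print(round(similarity(original, spun, use_synonyms=False), 2))  # 0.14
print(similarity(original, spun))  # 1.0
```

Compared word for word, the spun version looks mostly different; once synonyms are collapsed, the two sentences are identical. Scale that up across every copy of a spun article and the whole family lights up as duplicates.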

So, the next step was to pay large numbers of human freelancers to rewrite articles so they sounded different but contained all of the same information and points in more or less the same order. "Content mill" content of this sort thrived because it was much harder to detect, right up until… hold on, let me grab that quote.

"For example, about 10 years ago, there were understandable concerns about a rise in mass-produced yet human-generated content. No one would have thought it reasonable for us to declare a ban on all human-generated content in response. Instead, it made more sense to improve our systems to reward quality content, as we did."

What happened ten or so years ago? A little algorithm update meant to address low-quality and copied content. It was, perhaps, one of the single biggest changes in the history of search, and consequently of the internet as a whole. It was Google Panda.

Now, here we are once again. Article spinning is happening again, just abstracted one step further: AI does the spinning, drawing on huge blocks of human knowledge rather than specific source material, but otherwise for the same purposes and with the same outcomes.

It's like poetry; it rhymes.

My Predictions for the Future of AI in SEO

Google doesn't move fast anymore; at least, its search and webspam teams don't. They can't afford to move fast and break things anymore. They aren't agile.

Google takes their time. They carefully consider and analyze a situation. They have dozens of the best minds in the business thinking about all of the edge cases and secondary fallout of any decision they make. Even so, they don't always get it right! However, I do think that Google recognizes the threat that unrestricted AI content represents.

Consider: if Google does nothing and lets AI content run wild, what happens? It saturates every corner of the internet and very quickly turns everything into noise. Since AI has no concept of fact, there would be no concept of trust or authority anymore. People would eventually (possibly after hanging on longer than they should, but eventually) stop using Google. In a way, it's already happening. How many people search YouTube, Reddit, or TikTok for their information now, instead of searching Google?

How many people ask ChatGPT directly, since after all, if the results everywhere are just ChatGPT output, why not get it from the source instead?

Google has to do something. It's just a matter of what and when. I have a few predictions.

#1: Use of AI will become a more significant spam signal in the future.

Right now, "uses AI" isn't necessarily itself a spam signal. But it does run afoul of several other spam signals.

- Mass publication, which implies a lack of consideration.

- Spun content filters. AI with similar inputs produces similar outputs, which, just like spun content, can be detected from the bird's-eye view Google has.

- Lack of trust. When the "author" behind content is ChatGPT, what expertise, authority, or trust is represented?

Google doesn't necessarily need to make an AI detector and roll it into their algorithm, though nothing says they won't. AI output, if it's not heavily edited and reviewed, fact-checked, and overseen by humans, already trips enough spam flags that it's going to be caught. All Google has to do is tighten the bolts a little until they strike the right balance.

#2: A Panda-like event is coming.

The section above was meant to illustrate that, throughout the last two decades of SEO, Google has repeatedly encountered new technologies that dramatically change how content is created and weaponized against them, and they are absolutely no strangers to bringing the hammer down when they're sure it will do what they want it to do.

I fully, 100% expect the "AI Panda" event to happen. Just not yet.

#3: Google is actively harvesting information to set up their update.

Now, obviously, Google is always harvesting data and tweaking its algorithm. That's nothing new. They are very likely also testing potential anti-AI anti-spam tweaks internally to see what kinds of tertiary repercussions they would have. I believe they're working on it.

But, in the meantime, are you seeing what's happening out there? We've already had a couple of big shifts in the sites that rank using AI. First, it was the proliferation and subsequent demotion of sites that spun up out of nowhere to saturate a query. Recently, it was parasite SEO, which wasn't entirely AI but was exploited by AI.

Not to get too into conspiracy thinking, but this sounds a whole lot like bait. They trim off the bottom tier of the obvious garbage… and then they watch. They see what those sites and those webmasters do to adapt, so they can harvest that data, and roll it into their eventual updates.

Meanwhile, you can bet that Google has unfettered API access to every major AI model so they can reverse-engineer as much as possible. They're approaching the problem from every angle.

When the AI Panda event happens, it's going to be a huge change to content marketing once again. It's going to catch a lot of people in the crossfire, and it probably – unfortunately – won't undo a lot of the damage that has already been done. But then, Panda also killed off a lot of sites and ended a lot of people's livelihoods.

My advice?

Keep it in mind as you go.

Google isn't going to ban all use of AI unless global governments do what California has been doing and try to put severe limiters or outright bans on it. They're too invested in their own, after all.

But, much like the people who adapted to Panda by writing 1,100-word posts instead of 1,000-word posts and doing the bare minimum, only to be caught by the subsequent follow-up tweaks, this is going to be a change in the way content is evaluated. It's going to be huge, and it's going to cut off a lot of lower-quality, less-reviewed content.

I believe that AI is here to stay, but the way it gets used in marketing content will need to be reined in. If you want to use it, you either need to abuse it, get your pay, and get out before it hurts, or you need to be very subtle and cautious with using it in a way that still has enough human review and authority added to it to be trustworthy.

It's a familiar story, and it's destined to play out once again in the next year or two. That's my prediction.
