Google SpamBrain Recovery: Which Strategies Actually Work?

For decades now, Google has done everything it can to provide the best possible search results for users, no matter what those users are searching for. While the definition of "best" – including for whom the results are the best – has occasionally come into question, what isn't in question is that Google is always on the lookout for attempts to circumvent its rules or game the system to earn an artificially high search ranking.

Google constantly makes changes to its algorithms behind the scenes, but every so often it pushes a larger update, usually given a name that reflects its overall context and purpose. Many past updates were named after animals, like Panda, Penguin, and Hummingbird.

Others are just Core Updates with a date attached, and still others have names coined by the people studying their effects.

One of the more recent lasting changes is a system called SpamBrain. SpamBrain is a distinct part of Google's core algorithm, and it receives updates alongside everything else.

What is it, how does it work, and how can you recover if your site is hit by it? Let's talk about it.

What is Google SpamBrain?

SpamBrain is nothing new. In fact, the original version of SpamBrain launched in 2018, and it has received regular updates every year since. However, its existence, and even its name, were kept from the public until a Google webspam report published in 2022, which finally gave it the name we know today.

SpamBrain is billed as Google's AI-powered answer to spam websites. However, Google's use of AI here is closer to traditional machine learning than to the generative AI people currently think of. Google isn't asking ChatGPT whether a domain is spam, so you can put your fears of AI hallucinations to rest.

Essentially, SpamBrain is fed large amounts of data about websites and, across the whole index, learns the patterns that indicate spammy and low-quality pages. It can then flag domains that other review methods miss. Whether those patterns show up in usage, in text, or in link profiles, SpamBrain can identify spam pages with remarkable accuracy.

Exactly what SpamBrain looks for isn't public knowledge, and it may not be something anyone within Google fully knows either. Many machine learning systems end up as something of a black box: they're trusted only once their output has been verified through statistical sampling, at which point they can be unleashed. SpamBrain has had more than six years of development, so it's quite well refined.

It's also worth mentioning that Google generally tunes these kinds of algorithms conservatively. Google would rather miss some spam domains and catch them in a later iteration than flag legitimate, high-quality sites and deal with that hassle. It doesn't always feel that way, and plenty of people running terrible sites don't realize it until they're hit and have to confront the reality that their quality isn't up to snuff, but that's often how it works out.
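To make that trade-off concrete, here is a toy Python sketch of how the flagging threshold on any spam classifier shifts errors between missed spam and false alarms. The site names, scores, labels, and the `confusion_at` helper are all hypothetical; this illustrates thresholding in general, not how SpamBrain is actually tuned.

```python
# Hypothetical classifier scores in [0, 1] with ground-truth labels.
# Invented for illustration only; this is not SpamBrain data.
SCORED_SITES = [
    ("site-a", 0.95, True),
    ("site-b", 0.90, True),
    ("site-c", 0.72, False),  # a legitimate site that happens to score high
    ("site-d", 0.70, True),
    ("site-e", 0.40, False),
    ("site-f", 0.15, False),
]


def confusion_at(threshold, scored):
    """Count outcomes when every site scoring >= threshold is flagged as spam."""
    caught = missed = false_alarms = 0
    for _name, score, is_spam in scored:
        flagged = score >= threshold
        if flagged and is_spam:
            caught += 1
        elif flagged and not is_spam:
            false_alarms += 1
        elif is_spam:
            missed += 1
    return {"caught": caught, "missed": missed, "false_alarms": false_alarms}


for threshold in (0.6, 0.8):
    print(threshold, confusion_at(threshold, SCORED_SITES))
```

Raising the threshold from 0.6 to 0.8 removes the false alarm on the legitimate site at the cost of letting one spam site through, which is the kind of trade-off described above.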

So, while Google doesn't say exactly what they look for with SpamBrain, we know some things based on what they've said in the past and based on the assumption that they're using it to ramp up enforcement of their existing webspam rules.

What Does Google SpamBrain Look For?

In general, SpamBrain looks for sites that bear the hallmarks of, well, being spam.

That can take many forms.

One of the biggest things SpamBrain checks for is link spam. Sites that participate in private blog networks, link wheels and exchanges, link buying, and other forms of link spam are easier to detect with machine learning, and Google has publicly credited SpamBrain with detecting 50 times more link spam sites than it previously caught.

In 2024, the March core update arrived alongside three new additions to Google's spam policies, all of which we can assume SpamBrain now looks for. These are:

- Expired domain abuse. A borderline-spam technique was to buy expired domains that had previously ranked, put a site up on them to exploit any lingering SEO value, and funnel that value to target sites, even when the old domain's topic had nothing to do with the target site's.

- Scaled content abuse. This is essentially Google's "don't use generative AI to spam thousands of thin content pages" rule. Because generative AI is very good at producing text that sounds accurate but has no real substance or unique value, this kind of content is hard for human reviewers or simple analysis to catch, but machine learning can identify its patterns and hallmarks.

- Parasite SEO (which Google calls site reputation abuse). This is when a reputable site sells, rents, or leases subpages to unrelated businesses so they can leech off the host's SEO value. Because the reputable host domain was unlikely to be penalized as a whole, this kind of abuse could go unpunished for a long time.

All of these policies were added in early 2024 and are almost certainly part of SpamBrain now.

How Does Google SpamBrain Penalize Sites?

SpamBrain can penalize sites in four different ways, as far as we know.

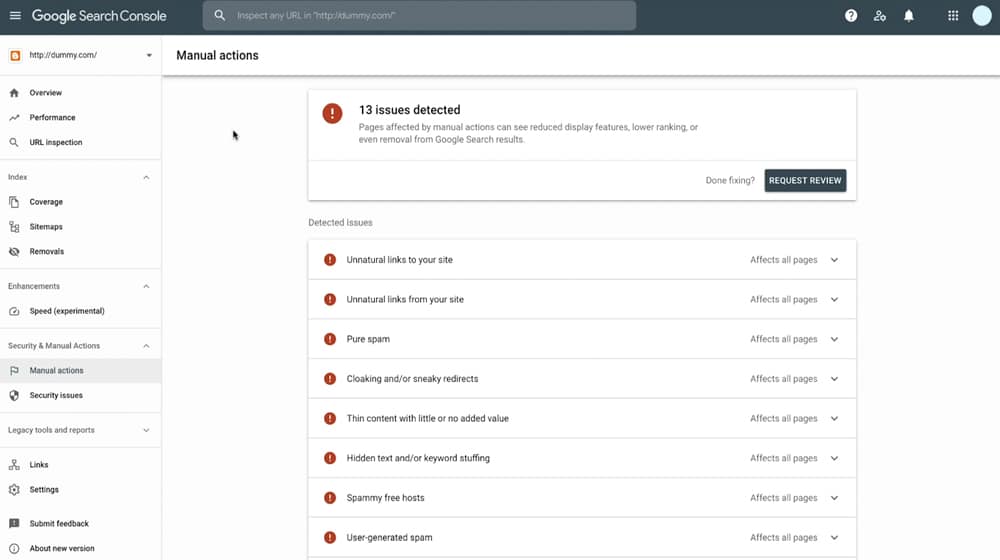

The first and most obvious is flagging the site for review and a subsequent manual action. Manual actions are the most obvious penalty because they're the only kind of penalty that is genuinely just that: a penalty. They're a punitive measure Google takes against sites that generally deserve a chance to fix their mistakes and get back on track. You tend to see manual actions most often on sites that have been on the "good side" for years but still carry lingering signs of old spam, on sites that have been hacked and need to recover first, and in similar cases.

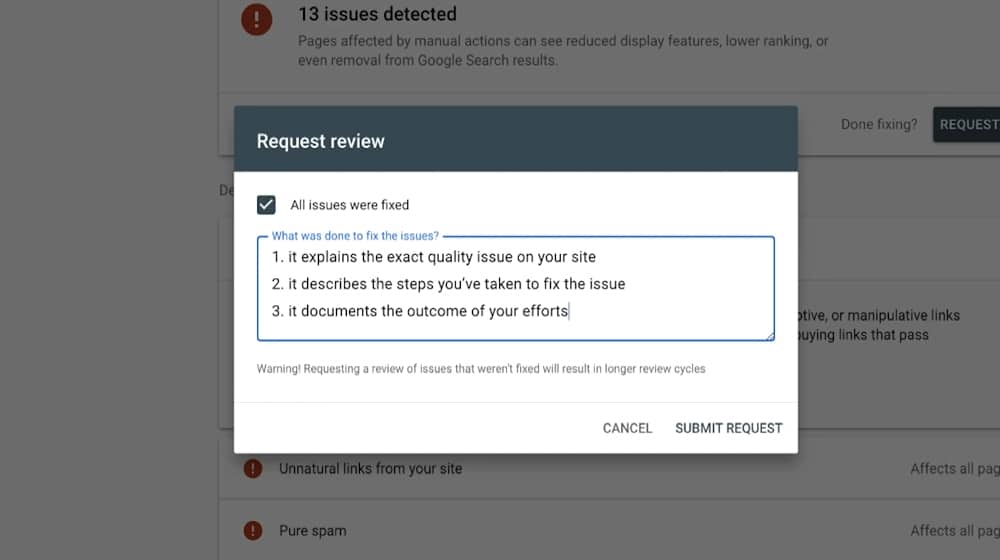

Manual actions are easy to see and, in some cases, even easy to fix. That's because Search Console tells you whether you have a manual action and, if so, which pages are triggering it and how to fix it. It's actually very helpful! You can read more about manual actions from Google directly or from resources like mine here.

The second way that SpamBrain can penalize a site is by acting as a preemptive gatekeeper. When Google discovers a new domain or a new page on an existing site, that page needs to be crawled, broken down into its component parts, analyzed, and ranked. But before any of that happens, it has to pass a number of filters and checks. Google doesn't want to waste time and resources analyzing a page that is just a 404, is full of gibberish or broken code, or is obviously spam.

SpamBrain, then, is one of those filters. It acts as a gatekeeper, checking whether a page shows any of the signs of spam it can recognize: link abuse on the page, a mismatch with the host domain that suggests parasite SEO, or content that looks scaled and mass-generated. Any of these can trigger SpamBrain, which will then filter the page out.

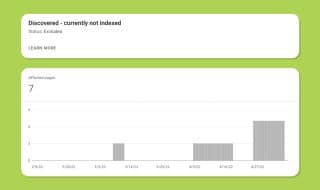

The penalty here is simply that the page isn't indexed. Google saves resources by filtering such pages before they reach the analysis stage, and the webmaster only learns something is wrong by checking the Page indexing report in Search Console and seeing pages that aren't indexed at all.
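One rough way to find pages that may be stuck at this stage is to compare the URLs in your XML sitemap against the URLs you've confirmed as indexed. This Python sketch assumes you already have that indexed list (for example, copied out of the Page indexing report); the sample URLs and the `sitemap_urls` and `unindexed` helpers are hypothetical. A page missing from the index isn't proof of a SpamBrain filter, since pages go unindexed for many ordinary reasons, but it tells you where to look.

```python
import xml.etree.ElementTree as ET

# Namespace defined by the sitemaps.org protocol.
SITEMAP_NS = {"sm": "http://www.sitemaps.org/schemas/sitemap/0.9"}


def sitemap_urls(sitemap_xml: str) -> set[str]:
    """Return every <loc> URL listed in a standard XML sitemap."""
    root = ET.fromstring(sitemap_xml)
    return {
        loc.text.strip()
        for loc in root.findall("sm:url/sm:loc", SITEMAP_NS)
        if loc.text
    }


def unindexed(sitemap_xml: str, indexed_urls: list[str]) -> list[str]:
    """Sitemap URLs that do not appear in your list of indexed URLs."""
    return sorted(sitemap_urls(sitemap_xml) - set(indexed_urls))


# Hypothetical sample data for illustration.
SAMPLE_SITEMAP = """<urlset xmlns="http://www.sitemaps.org/schemas/sitemap/0.9">
  <url><loc>https://example.com/a</loc></url>
  <url><loc>https://example.com/b</loc></url>
  <url><loc>https://example.com/c</loc></url>
</urlset>"""
INDEXED = ["https://example.com/a", "https://example.com/b"]

print(unindexed(SAMPLE_SITEMAP, INDEXED))
```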

The third way that SpamBrain can penalize a site is by zeroing out the links from that site. If a page is participating in a link scheme, making its outbound links worth nothing at all is a neat solution. Google doesn't risk enabling negative SEO, because the links aren't actively harmful to the sites receiving them, but it also stops rewarding the site's link exploitation, because those links no longer benefit the sites they point to. As far as spammers are concerned, the exploitative page simply becomes worthless.

This is also probably the hardest penalty to detect. There's no easy way to estimate the link value a page passes; usually the only sign is that your site behaves as if many of the links pointing to it had been removed. In fact, many people believe their sites were hit by SpamBrain when, in reality, the sites linking to them were hit, and they were collateral damage.
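Because zeroed-out links still show up in backlink tools, one practical check is to group your backlinks by referring domain and measure how much of your profile comes from domains you've found to be deindexed or heavily demoted. This Python sketch assumes you've assembled that set of "hit" domains yourself (for example, by spot-checking them in Google); the function names and the sample data are hypothetical.

```python
from collections import Counter
from urllib.parse import urlsplit


def referring_domain_counts(backlink_urls: list[str]) -> Counter:
    """Count backlinks per referring domain, ignoring a leading 'www.'."""
    counts: Counter = Counter()
    for url in backlink_urls:
        host = urlsplit(url).hostname  # lowercased, or None if missing
        if host:
            counts[host.removeprefix("www.")] += 1
    return counts


def exposure_to_hit_domains(backlink_urls, hit_domains):
    """Return (links per hit domain, share of all backlinks from hit domains)."""
    counts = referring_domain_counts(backlink_urls)
    affected = {d: n for d, n in sorted(counts.items()) if d in hit_domains}
    total = sum(counts.values())
    share = sum(affected.values()) / total if total else 0.0
    return affected, share


# Hypothetical backlink export and hand-checked set of penalized domains.
BACKLINKS = [
    "https://www.blog-one.example/post",
    "https://blog-two.example/a",
    "https://blog-two.example/b",
    "https://news.example/story",
]
HIT = {"blog-two.example"}

print(exposure_to_hit_domains(BACKLINKS, HIT))
```

A large share suggests your drop may be collateral damage from the linking sites rather than a problem with your own pages.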

The fourth way, of course, is simply demoting or even deindexing a page. Google does this all the time as it balances the factors that determine a page's rank, and it will do it to you if it decides your pages aren't actually as high quality as they initially appeared. A lot of AI spam sites are currently getting caught this way: they churned out thousands of thin posts barely better than spun content and assumed that, since the text wasn't obviously pattern-based, it wouldn't be detected. They were wrong.

Recovering from SpamBrain

Recovering from SpamBrain isn't simple; it comes down to identifying why and how your site was hit. Here are some steps you can try.

Check and Fix Manual Actions

The first and simplest thing to do is check the Manual Actions report in Search Console to see whether any manual actions have been taken against your site. Again, these are well explained, so if you have any, fixing them is relatively straightforward.

Now, I say straightforward, but it's often a complex task; what it isn't is a guessing game, because Google tells you exactly what it's taking issue with.

Disavow Bad Links and Build Better Links

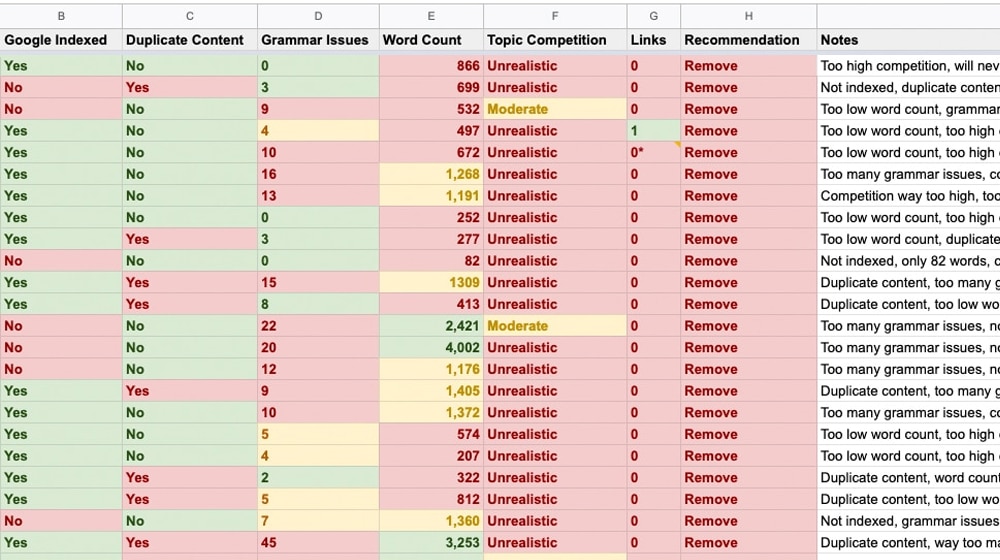

A huge part of SpamBrain is link spam, so one of the best things you can do is a comprehensive link audit.

Start by pulling as complete a backlink report as you can. Audit the links pointing to you and try to determine whether any of them come from spam sites. If your rankings dropped because of a SpamBrain update, there's a decent chance it's because you lost link value from sites that tanked as a result. You can disavow the bad links, but honestly, it likely won't help much. The issue isn't that the links are harmful; it's that they no longer count. You need to replace them with better links.
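If you do decide to disavow, Google's disavow tool takes a plain text file with one URL or `domain:` entry per line, where lines starting with `#` are comments. This Python sketch turns a list of audited, flagged backlink URLs into de-duplicated domain-level entries; the `build_disavow` name and the sample URLs are hypothetical, and whether to disavow whole domains or individual URLs is a judgment call for your own audit.

```python
from urllib.parse import urlsplit


def build_disavow(flagged_urls: list[str], whole_domain: bool = True,
                  note: str = "Flagged during link audit") -> str:
    """Build disavow-file text: a '#' comment, then URL or domain: entries."""
    lines = [f"# {note}"]
    seen = set()
    for url in flagged_urls:
        if whole_domain:
            host = urlsplit(url).hostname
            if not host:
                continue  # skip malformed entries rather than guessing
            entry = "domain:" + host
        else:
            entry = url.strip()
        if entry not in seen:  # avoid duplicate lines
            seen.add(entry)
            lines.append(entry)
    return "\n".join(lines) + "\n"


# Hypothetical URLs your audit marked as spam.
FLAGGED = [
    "https://spammy-links.example/page1",
    "https://spammy-links.example/page2",
    "http://link-farm.example/x",
]
print(build_disavow(FLAGGED), end="")
```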

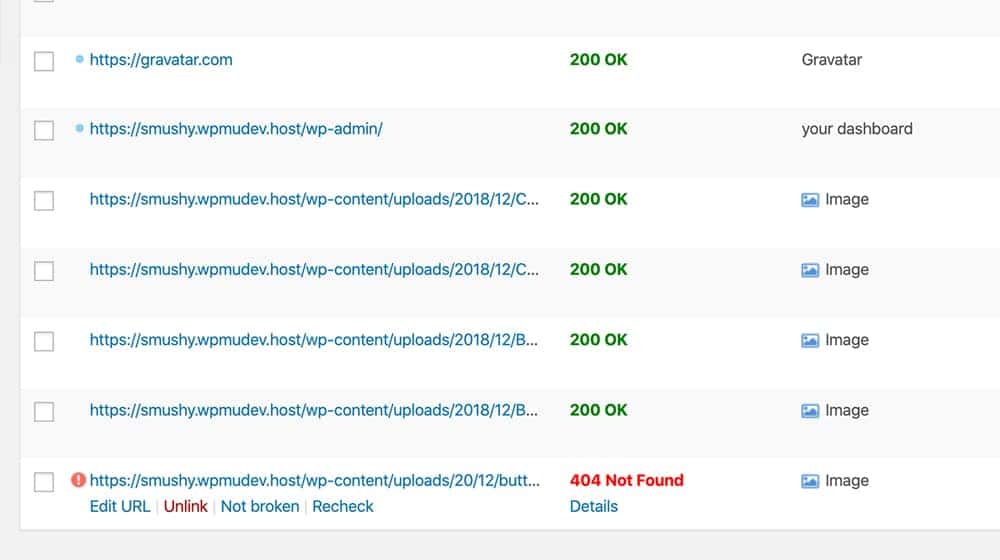

The second step is to audit all of your outbound links. If you've participated in link schemes, some of your links may be part of that scheme and need to be removed. It's also a good idea to check your links at least once a year anyway, to make sure you aren't linking to broken pages or sites that no longer exist. Tools like Screaming Frog or Greenflare make this much easier.
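For a quick spot check outside a full crawler, Python's standard `html.parser` module can pull every external link from a page along with its `rel` values, so you can see which outbound links are followed and which are marked `nofollow` or `sponsored`. This is a minimal sketch assuming you've already fetched the page's HTML; it does not check whether the targets are broken, which is what crawlers like Screaming Frog do at scale. The `OutboundLinkParser` and `outbound_links` names and the sample page are hypothetical.

```python
from html.parser import HTMLParser
from urllib.parse import urljoin, urlsplit


class OutboundLinkParser(HTMLParser):
    """Collect (absolute_url, sorted rel values) for links to other hosts."""

    def __init__(self, page_url: str):
        super().__init__()
        self.page_url = page_url
        self.page_host = urlsplit(page_url).hostname
        self.outbound = []

    def handle_starttag(self, tag, attrs):
        if tag != "a":
            return
        attrs = dict(attrs)
        href = attrs.get("href")
        if not href:
            return
        absolute = urljoin(self.page_url, href)  # resolve relative links
        parts = urlsplit(absolute)
        if parts.scheme not in ("http", "https"):
            return  # skip mailto:, tel:, javascript:, etc.
        if parts.hostname == self.page_host:
            return  # internal link, not outbound
        rel = (attrs.get("rel") or "").lower().split()
        self.outbound.append((absolute, sorted(rel)))


def outbound_links(page_url: str, html: str):
    parser = OutboundLinkParser(page_url)
    parser.feed(html)
    parser.close()
    return parser.outbound


# Hypothetical page for illustration.
PAGE = "https://example.com/post"
HTML = (
    '<p><a href="/about">About</a> '
    '<a href="https://partner.example/deal" rel="sponsored nofollow">Deal</a> '
    '<a href="mailto:me@example.com">Mail</a> '
    '<a href="https://other.example/">Other</a></p>'
)
for url, rel in outbound_links(PAGE, HTML):
    print(url, rel)
```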

Perform a Content Audit and Fix Generative Content

One of the most likely recent reasons for a site to be penalized by SpamBrain is Google's new iteration of scaled content abuse. The concept was originally aimed at sites that, for example, made one generic page, copied and pasted it 100 times, and swapped in the names of the top 100 cities in the US. That's functionally spam, because there's no unique, tailored value on any of those pages.
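The classic city-swap pattern can be caught in your own audit with a standard near-duplicate check: break each page into overlapping three-word "shingles" and compare pages by Jaccard similarity. This Python sketch is a generic technique, not Google's detection method; the page URLs, the text, and the 0.5 threshold are hypothetical. It only catches template reuse; paraphrased AI output can slip past simple shingling.

```python
import re
from itertools import combinations


def shingles(text: str, k: int = 3) -> set[tuple[str, ...]]:
    """Set of overlapping k-word sequences from lowercased text."""
    words = re.findall(r"[a-z0-9']+", text.lower())
    return {tuple(words[i:i + k]) for i in range(len(words) - k + 1)}


def jaccard(a: set, b: set) -> float:
    """Size of the intersection divided by size of the union."""
    if not a and not b:
        return 1.0
    return len(a & b) / len(a | b)


def near_duplicates(pages: dict[str, str], threshold: float = 0.5, k: int = 3):
    """Return (url1, url2, score) for every page pair at or above threshold."""
    sets = {url: shingles(text, k) for url, text in pages.items()}
    pairs = []
    for (u1, s1), (u2, s2) in combinations(sets.items(), 2):
        score = jaccard(s1, s2)
        if score >= threshold:
            pairs.append((u1, u2, round(score, 2)))
    return pairs


# Hypothetical pages: two city-swapped copies and one unrelated guide.
TEMPLATE = ("Looking for reliable plumbing services in {city}? Our licensed plumbers "
            "in {city} fix leaks, unclog drains, and install water heaters. "
            "Call today for a free quote.")
PAGES = {
    "/plumber-denver": TEMPLATE.format(city="Denver"),
    "/plumber-austin": TEMPLATE.format(city="Austin"),
    "/winterizing-pipes": "Our guide explains how to winterize outdoor pipes "
                          "before the first hard freeze.",
}
print(near_duplicates(PAGES))
```

The two city pages score 0.6 because only the shingles containing the city name differ, while the unrelated guide shares no shingles with either.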

These days, this kind of abuse is a lot harder to spot, because generative AI is usually what's being exploited to produce those hundreds or thousands of posts. However, the ML systems Google employs can detect patterns far more readily than individual reviewers can, and can likely tell when your content has largely been produced by AI.

Unfortunately, there's no simple way to recover from this short of performing a detailed content audit and purging or improving bad content. It's a ton of work, but it's work you'll need to do if you want to recover from a content-focused penalty like that.
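A content audit usually ends with a keep, improve, or remove decision for each page. This Python sketch shows one way to do a first-pass triage from metrics you already collect; the `PageStats` fields, the 300-word and 10-click thresholds, and the bucket names are all hypothetical. Word count in particular is only a prompt for human review, not a quality score.

```python
from dataclasses import dataclass


@dataclass
class PageStats:
    url: str
    word_count: int
    clicks_90d: int      # hypothetical: organic clicks over the last 90 days
    has_duplicate: bool  # e.g. flagged by a near-duplicate check


def triage(page: PageStats, min_words: int = 300, min_clicks: int = 10) -> str:
    """First-pass bucket for a page; a human should confirm every decision."""
    if page.has_duplicate and page.clicks_90d < min_clicks:
        return "remove or consolidate"
    if page.word_count < min_words:
        return "improve"
    if page.clicks_90d < min_clicks:
        return "review"
    return "keep"


# Hypothetical audit rows.
AUDIT = [
    PageStats("/a", 1200, 250, False),
    PageStats("/b", 150, 40, False),
    PageStats("/c", 800, 2, True),
    PageStats("/d", 900, 3, False),
]
for page in AUDIT:
    print(page.url, triage(page))
```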

SpamBrain is nothing new, and it's certainly here to stay, so you're likely to see the name come up from time to time in Google's core algorithm updates. Google is always iterating on new ways to filter out bad and exploitative content, so truly, the best way to recover is to avoid the practices that cause penalties in the first place.
