Why Shopify Indexes Empty Pages on Google (With Fixes)

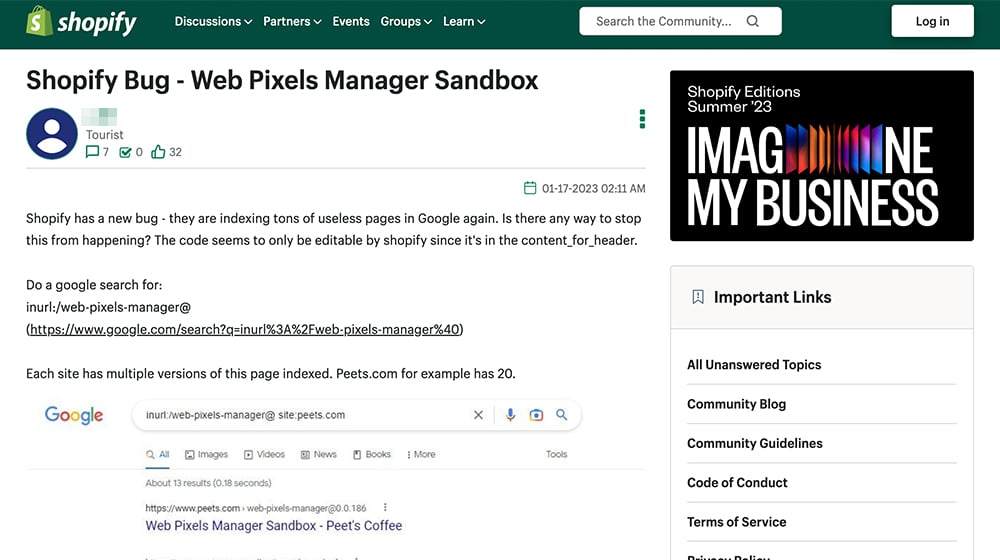

Shopify is a very popular platform for hosting web blogs and stores, but any time you're using a platform you don't fully control, you risk running into issues you can't fix. Recently, one of those issues has been cropping up across Shopify sites.

What's Happening?

Basically, Shopify has a system it uses to manage tracking pixels and other code that monitors user behavior and records it for analytics. Things like the Facebook Tracking Pixel are the main examples, but there are all sorts of tracking codes out there.

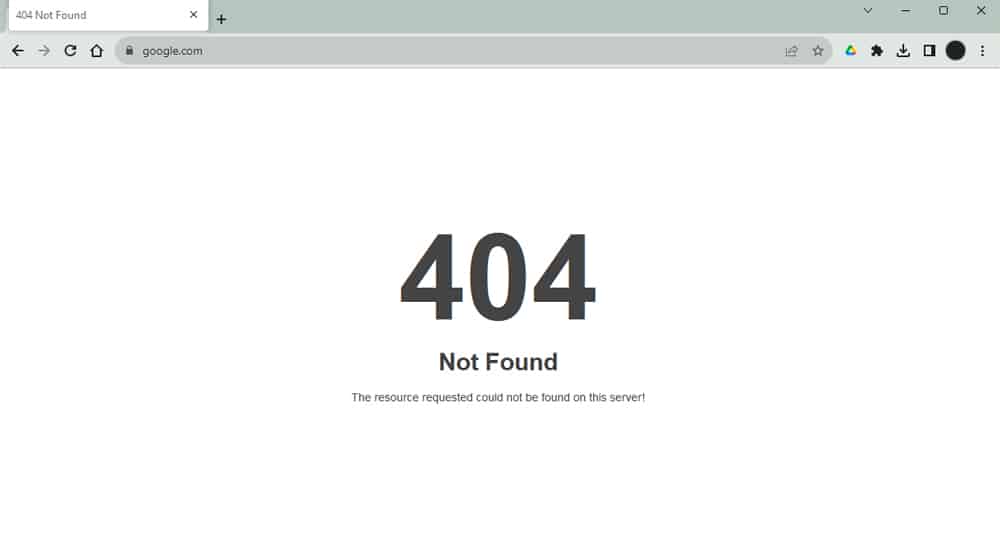

Normally, the system used to manage this code is hidden. Google doesn't see or care about the pages, and users don't either. For some sites, you can't reach the pages even if you want to; they go to a 404 if you're not a site admin. Other sites render them as blank pages since everything on them is metadata (which you can see in this thread).

The trouble is, since this is part of Shopify's infrastructure, they're the ones who control how it's formatted and how it's hidden. When they make a change – like they did recently – they can break the code and the fixes that hide it.

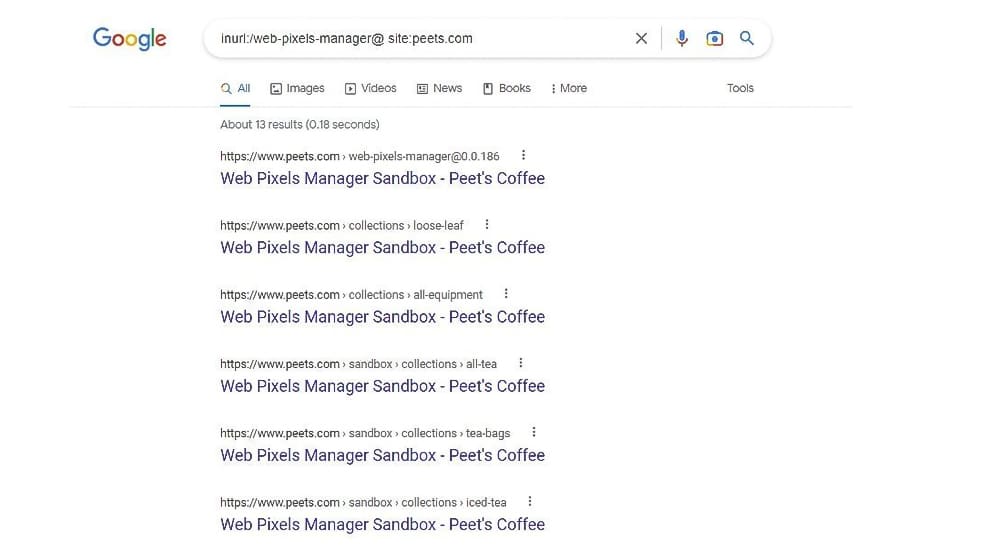

Many Shopify users recently saw a huge spike in the number of indexed pages on their sites because Google started detecting and indexing these pages. They all have URLs like:

- Example.com/web-pixels-manager@…@##########

- Example.com/wpm@…@#########/sandbox/en-ca/products/

These are both the same thing, just in two different formats. The "web-pixels-manager" format was the old one, and "wpm@" is the new one.

These pages are thin content at best: system pages that don't offer any value to users. Normally, search engines would ignore them because some directive tells them to, either in a robots.txt file or in the page metadata with a noindex tag.

Unfortunately, well, see for yourself. This site, run on Shopify, has nearly 700 indexed pages that are all WPM pages.

Why is This a Problem?

Three reasons.

First, it's unsightly and skews your analytics and other metrics. If you're the kind of person who keeps an eye on the number of indexed pages on your site to make sure you're getting picked up and indexed properly, having all of these additional pages cluttering up the index is a mess, and it's hard to deal with.

Second, it's an infosec issue. Any time system pages are available, you risk some kind of data breach, malicious attack, or other problem cropping up. I don't know that there's anything an attacker can do with a bunch of URLs that go to 404 pages, but you never know; maybe someone could pull tracking IDs and mess with your analytics or do something else inconvenient. And the URLs only go to 404 pages if you've redirected them. More on that later.

Third, and most importantly, it's an SEO issue. Suddenly, Google indexes a bunch of pages on your site, but all of them are thin or no-content pages with no value to users. What is Google going to do with them?

Normally, we can trust that Google is smart enough to understand that these pages aren't really intended to be indexed or counted and that they'll just be ignored by the algorithms. But I've seen a lot of cases in my time where Google doesn't do the smart thing, at least not right away, and a site can take a pretty significant hit because of it in the meantime.

As site owners, we do a lot to smooth things over so that Google doesn't misinterpret some issue or mistake and penalize us for it.

Don't get me wrong; this isn't a Shopify-exclusive issue. I ran into something similar myself a while back when Yoast pushed an update that accidentally allowed a bunch of WordPress system pages to be indexed. This is just the kind of thing we all have to deal with.

The tricky part is that, unlike WordPress, where you can do whatever you want to the system running the site, Shopify keeps that all under lock and key as much as they can. It's a simple platform for people who don't want to have to dig in the guts of a website, and while that's fine for a lot of people, it also means there's a lot of room for errors like this to run unchecked.

What's the Solution?

There are two general solutions here.

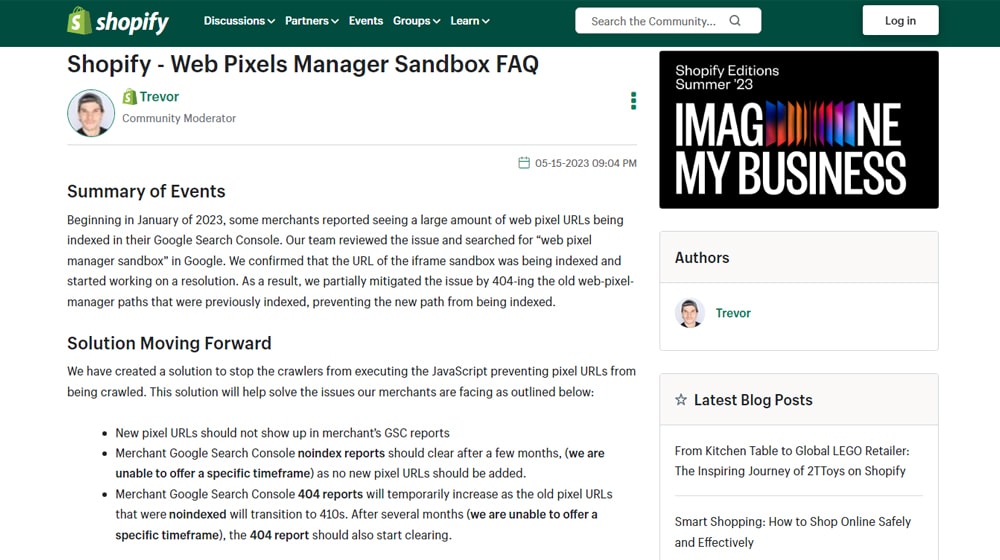

The first is to wait for Shopify to deal with it. They will! They've fixed problems like this in the past, and they'll fix this one. It'll take a bit of time for Google to sort it all out, though. That's because Google is already pretty slow with indexing a lot of sites, and they deprioritize thin content pages like this since they're unlikely to be meaningful or change.

In fact, Shopify tried to fix the issue, which is actually part of why it's an issue in the first place. The correct way to fix this kind of problem is to use noindex and nofollow tags in the metadata for the pages. That way, when Google stumbles across them, they know to ignore them.
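If you want to check whether a page actually carries those directives, here's a minimal Python sketch that reads the robots meta tag out of a page's HTML using only the standard library. The sample markup and the names `RobotsMetaParser` and `robots_directives` are my own illustrations, not anything Shopify provides, and the sketch ignores the `X-Robots-Tag` HTTP header, which can carry the same directives.

```python
from html.parser import HTMLParser


class RobotsMetaParser(HTMLParser):
    """Collects the directives from every <meta name="robots"> tag."""

    def __init__(self):
        super().__init__()
        self.directives = set()

    def handle_starttag(self, tag, attrs):
        if tag != "meta":
            return
        # Attribute values can be None (e.g. <meta name>), so default to "".
        attr_map = {name: (value or "") for name, value in attrs}
        if attr_map.get("name", "").lower() != "robots":
            return
        for directive in attr_map.get("content", "").split(","):
            directive = directive.strip().lower()
            if directive:
                self.directives.add(directive)


def robots_directives(html: str) -> set[str]:
    """Return the set of robots directives declared in the page's meta tags."""
    parser = RobotsMetaParser()
    parser.feed(html)
    return parser.directives


# Hypothetical markup for a blank system page that asks to stay out of the index.
sample_page = (
    '<html><head><meta name="robots" content="noindex, nofollow">'
    "</head><body></body></html>"
)

if "noindex" in robots_directives(sample_page):
    print("This page asks search engines not to index it.")
```

To use it on a real page, you'd fetch the HTML yourself and pass it to `robots_directives`.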

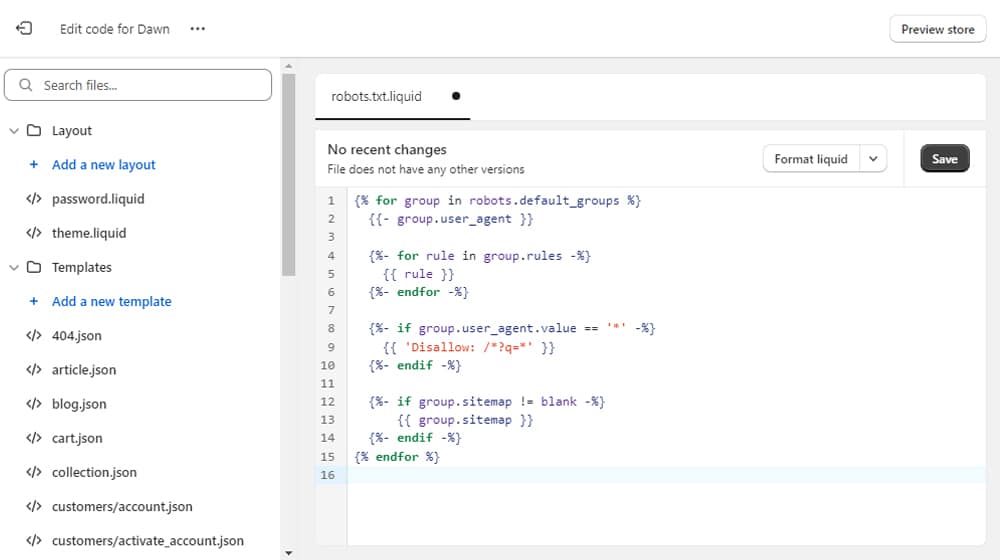

Shopify changed something in their code, and it wiped these directives, which allowed Google to index the pages for a lot of stores. Then they put the directives back in. But they went too far and also added a block in robots.txt.

For those unfamiliar, a robots.txt file is a file in your site's root directory that tells Google and other search bots what to ignore throughout a site. It's a good way to block entire subfolders and URL structures from appearing in searches.

See the issue here?

Shopify added the directive on the pages to remove them from the index… but they also added the robots.txt directive telling Google not to go to the pages. So, Google never goes back to the pages to see the new directive telling it to remove them. The pages are stuck in the index until Google's record of them expires and it has to review them again.
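To make that conflict concrete, here's a rough Python sketch using the standard library's `urllib.robotparser`. The robots.txt rules and the URLs are assumptions I made up for illustration; I don't know the exact lines Shopify added. Also note that Python's parser does simple prefix matching, which is not identical to how Googlebot interprets robots.txt, so treat this as a demonstration of the idea only.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical rules blocking both WPM URL formats (not Shopify's actual file).
ROBOTS_TXT = """\
User-agent: *
Disallow: /wpm@
Disallow: /web-pixels-manager@
"""


def crawler_can_fetch(robots_txt: str, url: str, user_agent: str = "Googlebot") -> bool:
    """Return True if the given robots.txt text lets user_agent crawl the URL."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return parser.can_fetch(user_agent, url)


# Placeholder URLs; the ID segment is invented.
wpm_url = "https://example.com/wpm@abc123/sandbox/en-ca/products/"
product_url = "https://example.com/products/example-item"

for url in (wpm_url, product_url):
    if crawler_can_fetch(ROBOTS_TXT, url):
        print(f"Crawlable, so a noindex tag on it can be seen: {url}")
    else:
        print(f"Blocked, so a noindex tag on it is never read: {url}")
```

A crawler that obeys the block never downloads the WPM page, so it has no chance to read the noindex tag sitting in that page's metadata.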

Shopify will figure it out. In fact, by the time of this writing, it's entirely possible they already have. The issue is that it will take time for Google to figure it out and appropriately re-index your site.

The second solution is to take some steps to fix the issue and prevent similar problems from happening again.

Steps You Can Take

While you're waiting for Shopify to fix things or Google to index the fix, what steps can you take? Thankfully, you have a few options you can use individually or all together.

Edit Shopify's Robots.txt File.

Shopify allows you to edit your site's robots.txt file, but they don't really like it. They label it an unsupported edit, so if you change the file around and need to get support from Shopify later, they won't give it to you.

Unfortunately, this isn't just a plain txt file you can edit. You have to go through your themes and edit the robots.txt.liquid file, which works a bit differently and doesn't reflect everything Shopify might want to have in their general robots.txt directives.

The general steps are:

- Log into your Shopify Admin control panel.

- Click Settings.

- Click Apps and sales channels.

- Click Online Store.

- Click Open Sales Channel.

- Click Themes.

- Click Actions.

- Click Edit Code.

- Click Add New Template.

- Choose Robots.

- Click Create Template.

- Make your customizations and save the template.

To figure out how to edit this template and what code is supported, you can refer to the Shopify developer page for it here.

Since this doesn't give you complete control over your site's robots.txt file (and Shopify can still remotely update it), you might want something that gives you a little more control. The Shopify app Robots.txt Editor can help here. It's a one-time purchase for $19, and it basically just unlocks your robots.txt file for normal editing. It separates it from your theme files, so you can have a robots.txt file independent of your site theme and divorced from the normal theme-based editing.

Here's what a fairly robust robots.txt file looks like. It doesn't have the WPM edits in it, though; for that, you'll need to put your own versions in.

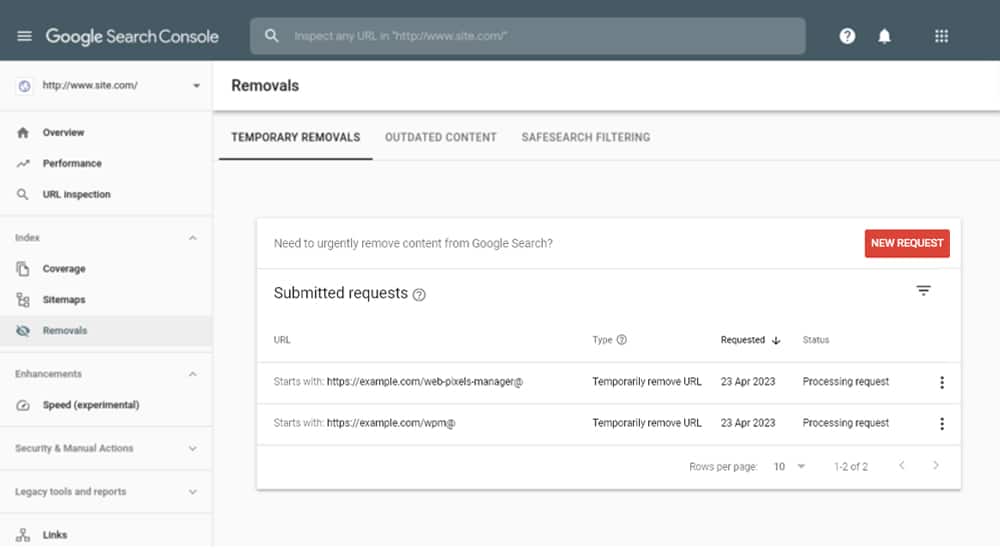

Remove Bad Pages from Your Google Search Console.

While you wait for Shopify, robots.txt, and indexing to sort themselves out, you can ask Google to pull these pages from its search results. To do this, you need to have claimed your site in the Google Search Console.

The tool to use is the URL Removal Tool.

All you need to do here is remove URLs that match the two patterns:

- Example.com/web-pixels-manager@

- Example.com/wpm@

You don't have to go through every page that has a WPM URL and list them all; the tool has an option to remove every URL that starts with a given prefix. Just make sure you don't go too broad and accidentally request the removal of your whole site!
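Before you submit anything, it can help to sanity-check which of your URLs a pattern would actually catch. Here's a small Python sketch that filters a list of URLs down to the ones whose path starts with either WPM prefix. The sample URLs and the function names are hypothetical; in practice you'd feed in whatever list of indexed URLs you have exported.

```python
from urllib.parse import urlparse

# The two path prefixes discussed in this article.
WPM_PREFIXES = ("/web-pixels-manager@", "/wpm@")


def is_wpm_url(url: str) -> bool:
    """Return True when the URL's path starts with one of the WPM prefixes."""
    return urlparse(url).path.startswith(WPM_PREFIXES)


def wpm_urls(urls: list[str]) -> list[str]:
    """Keep only the URLs that a WPM prefix removal would catch."""
    return [url for url in urls if is_wpm_url(url)]


# Hypothetical sample; the ID segments are placeholders.
indexed = [
    "https://example.com/wpm@abc123/sandbox/en-ca/products/",
    "https://example.com/web-pixels-manager@abc123/sandbox/",
    "https://example.com/products/example-item",
    "https://example.com/blogs/news/what-is-wpm",
]

for url in wpm_urls(indexed):
    print(url)
```

Matching on the start of the path, rather than searching for "wpm" anywhere in the URL, is what keeps ordinary pages like that last blog post out of the removal request.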

Google's URL removal tool doesn't remove your content from their index, nor does it remove the listed URLs from your Search Console list of indexed URLs. What it does is remove those URLs from the search results. Google knows they exist but won't show them in their search results, even if someone does a site search with the URL parameter to find them.

Normally, a URL removal lasts about six months before Google will start putting the pages back in their results. In this case, though, those pages should drop out of the index once the noindex directives are identified and obeyed, so by the time six months roll around, you won't have to worry about it.

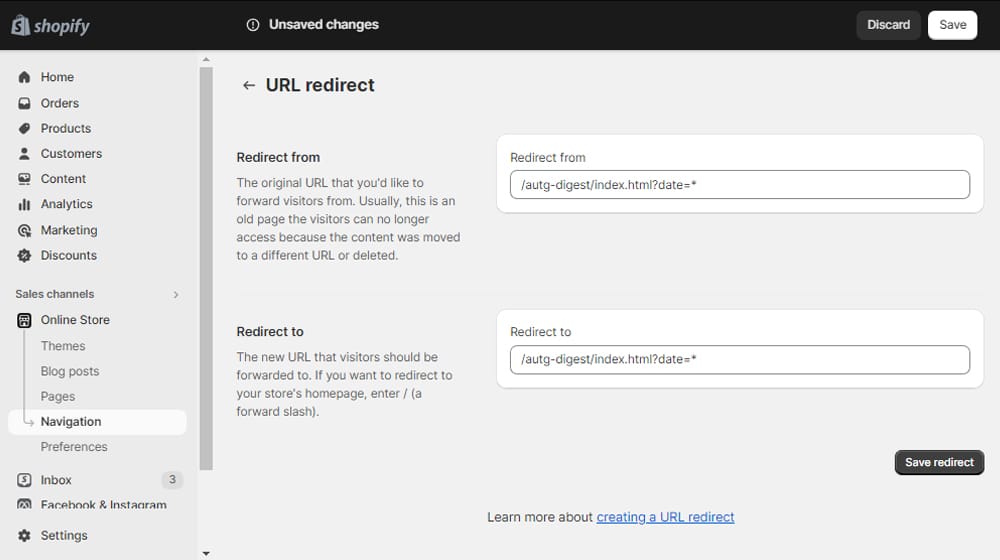

Use a Page Redirect Plugin to Redirect the URLs.

In the Fangamer example I listed above, while all of the WPM pages still show up in the search results, clicking on them just brings you to a 404 page rather than the page with all the sensitive metadata on it. So, adding redirects can be a great way to help handle the public-facing side of the problem.

Shopify allows you to add a redirect for a URL using this process:

- Log into your Shopify admin control panel.

- Click Settings.

- Click Apps and sales channels.

- Click Online Store.

- Click Open Sales Channel.

- Click Navigation.

- Click URL Redirects.

- Click Create URL Redirect.

- Under "Redirect From," plug in your WPM URL.

- Under "Redirect To," choose a 404 page or your homepage.

- Click to Save the Redirect.

Unfortunately, while Shopify will let you redirect a lot of URLs at once, you need to have them all in a CSV and use their bulk uploading tool. If you have hundreds or thousands of WPM pages you want to redirect, that's going to be a heck of a project.
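If you already have a list of the WPM paths, a short script can at least build that CSV for you. This is a sketch under assumptions: the column headers `Redirect from` and `Redirect to` are what I understand Shopify's import template to use, so check them against the template you download from your own admin before uploading. The paths below are placeholders.

```python
import csv
import io


def build_redirect_csv(paths, target="/"):
    """Return CSV text that redirects every path in `paths` to `target`.

    The header names are an assumption about Shopify's import template.
    """
    buffer = io.StringIO()
    writer = csv.writer(buffer, lineterminator="\n")
    writer.writerow(["Redirect from", "Redirect to"])
    # dict.fromkeys drops duplicate paths while keeping the original order.
    for path in dict.fromkeys(paths):
        writer.writerow([path, target])
    return buffer.getvalue()


# Hypothetical WPM paths; the ID segments are invented.
wpm_paths = [
    "/wpm@abc123/sandbox/en-ca/products/",
    "/web-pixels-manager@abc123/sandbox/",
    "/wpm@abc123/sandbox/en-ca/products/",  # duplicate, written only once
]

csv_text = build_redirect_csv(wpm_paths, target="/")
print(csv_text)
```

Here everything is pointed at the homepage (`/`); swap the `target` for a custom landing page path if you'd rather send visitors there.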

Fortunately, as with many features Shopify is missing, this one also has apps to solve it for you. There are a bunch of different apps you can use to redirect using wildcards and modifiers to even make it contextual. Combine this with a custom 404 page or a redirect to a sales page or another CTA, and you can turn an error into a bit of value.

Give It Time

Shopify has fixed this issue, but it seems to keep cropping up every few months, at least as far as comments in this thread go.

My advice, if you don't want to tinker with your site robots.txt or mess with mass-removing URLs through Google, is to just let it ride. It's a frustrating issue, and waiting on Google to re-index your site can be a tedious process, I know. But if you're not seeing a hit to your rankings or SEO metrics, then it's not really a problem, right? So, that's what I'd check. If your rankings, traffic, or other metrics have dropped since the issue started, then by all means, take as many actions as you can to fix it.

Otherwise, it's not a problem until it becomes a problem, and if Google can sort it out on their own, you don't need to pay for apps and mess with code. Unless you want to, of course! I always advocate in favor of knowing how to root around in the guts of your site to fix issues and streamline performance.

As always, if you have any questions about anything I touched on in this article, please feel free to let me know. I'll gladly help you out however I can.
